What is anomaly detection?

Anomaly detection is a critical part of data mining that identifies information or observations that are significantly different from the dataset’s overall pattern of behavior.

Also known as outlier analysis, anomaly detection finds errors, such as technical bugs, and pinpoints changes that could result from human behavior. Once enough data has been gathered to form a baseline, anomalies (data points that deviate from the norm) become much easier to spot as they happen.
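To make the baseline idea concrete, here is a minimal Python sketch. It assumes the baseline is simply the mean and standard deviation of historical values, and it flags new values that sit more than a chosen number of standard deviations away (a basic z-score rule). The function name `flag_anomalies`, the default threshold of 3, and the sample data are illustrative assumptions, not a prescribed method.

```python
from statistics import mean, stdev

def flag_anomalies(history, new_values, threshold=3.0):
    """Flag new values that deviate strongly from a baseline built from history.

    Assumption: the baseline is the mean and sample standard deviation of
    `history`, and anything more than `threshold` standard deviations away
    is treated as an anomaly.
    """
    baseline = mean(history)
    spread = stdev(history)  # sample standard deviation; needs at least two values
    if spread == 0:
        # A perfectly flat history: any value that differs from it is unusual
        return [v for v in new_values if v != baseline]
    return [v for v in new_values if abs(v - baseline) / spread > threshold]

# Hypothetical daily login counts used to form the baseline
history = [102, 98, 101, 97, 103, 99, 100, 104, 96, 100]
print(flag_anomalies(history, [101, 99, 160, 95]))  # → [160]
```

With a baseline of 100 and a spread of roughly 2.6, only the value 160 lies far enough outside the normal range to be flagged.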

Being able to find anomalies correctly is essential in many industries. Although some anomalies may be false positives, others signify a larger issue.

Hacking and bank fraud are some of the most commonly identified anomalies in data, wherein unusual behavior is detected using digital forensics software. Many of these systems now use artificial intelligence (AI) to monitor for anomalies around the clock automatically.

Types of anomaly detection

While every industry has its own set of quantitative data unique to what it does, anomaly detection generally falls into one of two categories, depending on whether that data is labeled.

- Supervised detection. Labeled historical data, in which past anomalies have already been identified, is used to train AI-run machines to identify anomalies in similar datasets. The machine learns which patterns to expect, but it can struggle with anomalies it hasn't seen before.

- Unsupervised detection. Most businesses don't have enough labeled data to train AI systems for anomaly detection accurately. Instead, they use unlabeled datasets, and the machine flags points it believes are outliers without comparing them to an existing labeled dataset. Teams can then manually tell the machine which behavior is normal and which is a true anomaly. Over time, the machine learns to identify these on its own.

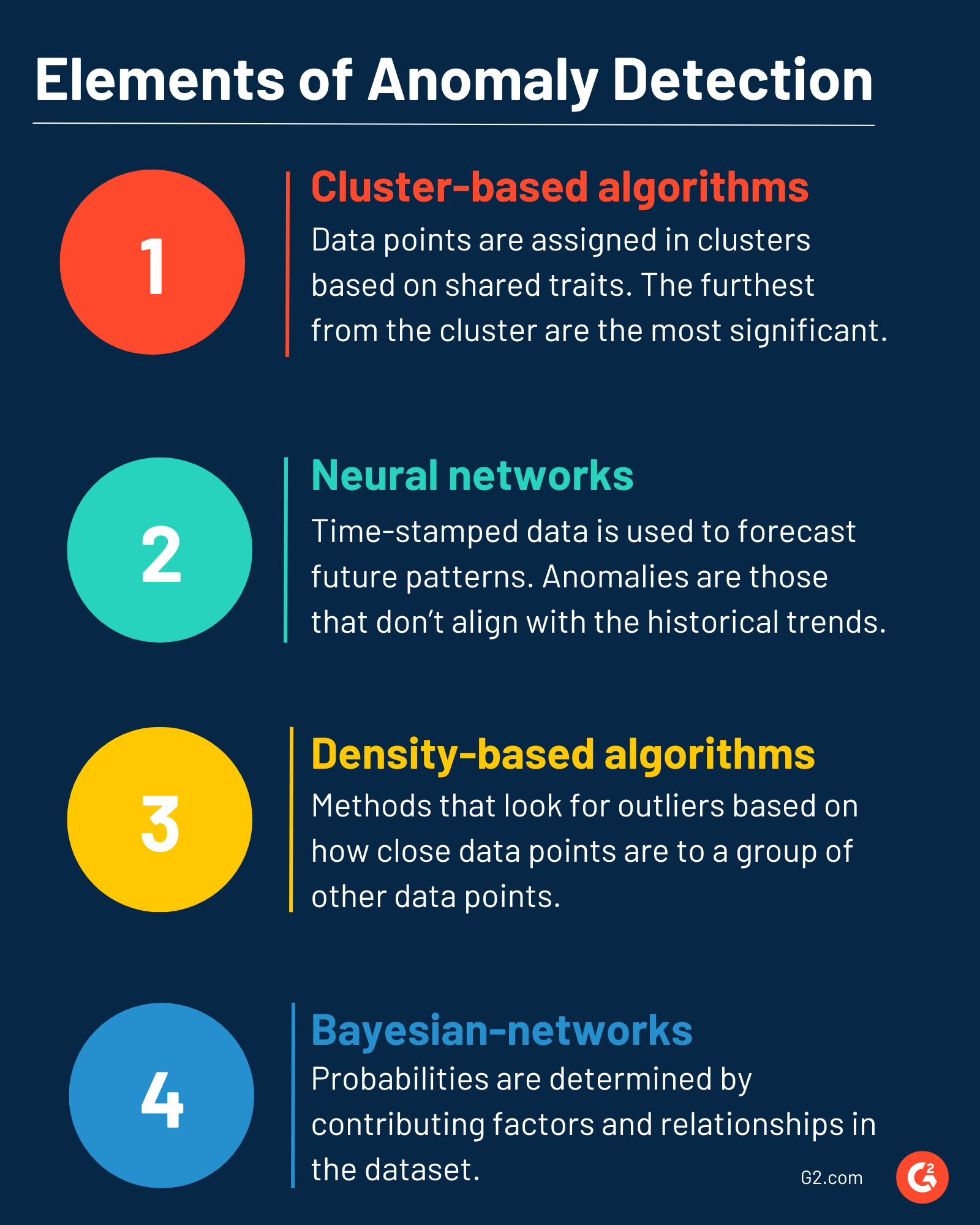
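The difference between the two categories can be sketched in a few lines of Python. The supervised function learns a single cutoff from hypothetical labeled history; the unsupervised function uses Tukey's interquartile-range (IQR) rule, one common label-free heuristic, to flag outliers with no labels at all. The function names, the data, and the one-cutoff model (which assumes anomalies are unusually high values) are illustrative assumptions; real systems use far richer models.

```python
from statistics import quantiles

def learn_threshold(labeled):
    """Supervised: pick the cutoff that best separates labeled examples.

    `labeled` is a list of (value, is_anomaly) pairs. Assumption: anomalies
    are unusually high values, so each observed value is tried as a cutoff
    and the one with the fewest misclassifications is kept.
    """
    best_cutoff, best_errors = None, None
    for cutoff, _ in labeled:
        errors = sum((value >= cutoff) != is_anomaly for value, is_anomaly in labeled)
        if best_errors is None or errors < best_errors:
            best_cutoff, best_errors = cutoff, errors
    return best_cutoff

def iqr_outliers(values, k=1.5):
    """Unsupervised: flag values outside Tukey's fences, no labels needed."""
    q1, _, q3 = quantiles(values, n=4)  # quartiles of the data
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]

# Hypothetical labeled history: (transaction size, was it confirmed fraud?)
labeled = [(10, False), (12, False), (11, False), (30, True), (35, True), (13, False)]
print(learn_threshold(labeled))  # → 30

# Unlabeled data: the machine flags outliers on its own
print(iqr_outliers([10, 12, 11, 13, 12, 11, 40]))  # → [40]
```

The supervised cutoff can only be as good as the labels behind it, while the IQR rule needs no labels but has no notion of which outliers actually matter; that gap is what the manual feedback step described above helps to close.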

Basic elements of anomaly detection

The right detection technique depends on the type of data used to train the machine, which the organization typically gathers on an ongoing basis.

Some of the most commonly used techniques are:

- Cluster-based algorithms. Data points are grouped into clusters based on shared traits. Anything that doesn't fit into a cluster could be an outlier; the farther a data point lies from the nearest cluster, the more likely it is to be an anomaly, and the most distant points are the most significant anomalies.

- Neural networks. Networks trained on time-stamped data forecast expected future patterns; anomalies are data points that don't align with the historical trends seen in earlier data. This type of detection often looks for both individual points and whole sequences that deviate from the forecast.

- Density-based algorithms. Like cluster-based methods, density-based detection looks for outliers based on how close data points are to an established group of other data points. Areas of higher density contain more closely packed data points, so anomalies in sparser areas stand out because they're separated from the denser groups.

- Bayesian networks. Forecasting is also important in this technique. Probabilities and likelihoods are determined by the contributing factors in the dataset and by relationships between data points that share the same root cause; observations the network considers highly improbable are flagged as anomalies.
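The cluster-based and density-based techniques in the list above can both be sketched with standard-library Python. The cluster-based score is each point's distance to its nearest k-means centroid; the density-based score is the mean distance to a point's k nearest neighbors, so points in sparse regions score high. This k-nearest-neighbor score is a simpler stand-in for density methods such as DBSCAN or the local outlier factor, and the function names, naive k-means seeding (the first k points), and sample points are assumptions for illustration only.

```python
import math

def kmeans(points, k, iterations=20):
    """Plain k-means returning k centroids.

    Simplification: the first k points are used as initial centroids;
    production implementations use smarter seeding.
    """
    centroids = [tuple(p) for p in points[:k]]
    for _ in range(iterations):
        groups = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            groups[nearest].append(p)
        # Move each centroid to the mean of its group (keep it if the group is empty)
        centroids = [
            tuple(sum(c) / len(g) for c in zip(*g)) if g else centroids[i]
            for i, g in enumerate(groups)
        ]
    return centroids

def cluster_scores(points, centroids):
    """Cluster-based score: distance from each point to its nearest centroid."""
    return [min(math.dist(p, c) for c in centroids) for p in points]

def knn_scores(points, k=2):
    """Density-based score: mean distance to the k nearest neighbors.

    Points in sparse regions have distant neighbors and get large scores.
    """
    scores = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        scores.append(sum(dists[:k]) / k)
    return scores

# Hypothetical 2-D data: two tight groups plus one isolated point at (9, 1)
points = [(1, 1), (5, 5), (1.2, 0.9), (5.1, 4.9), (0.8, 1.1), (4.9, 5.2), (9, 1)]
centroids = kmeans(points, k=2)
print(cluster_scores(points, centroids))
print(knn_scores(points, k=2))
```

In both score lists, the isolated point `(9, 1)` receives the largest value. Note that this naive k-means lets the outlier join a cluster and pull its centroid toward it, so a cluster-based score is only as reliable as the clustering behind it.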
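The forecast-and-compare idea behind the neural-network technique can be illustrated without a neural network. In the sketch below, the median of the previous few values stands in for the forecast, and a point is flagged when it misses that forecast by more than a relative tolerance. The rolling-median forecast, the window size, the 20% tolerance, and the data are all hypothetical; in a real system, a trained model (for example, a recurrent network) would produce the forecast.

```python
from statistics import median

def forecast_anomalies(series, window=3, tolerance=0.2):
    """Return indexes where a value misses its forecast by more than `tolerance`.

    Assumption: the forecast is the median of the previous `window` values,
    a deliberately simple stand-in for a trained neural network. The median
    limits how much a single spike distorts the forecasts that follow it.
    `tolerance` is a relative error (0.2 means 20%).
    """
    flagged = []
    for t in range(window, len(series)):
        forecast = median(series[t - window:t])
        if forecast and abs(series[t] - forecast) / abs(forecast) > tolerance:
            flagged.append(t)
    return flagged

# Hypothetical hourly order counts with one sudden spike at index 5
hourly_orders = [50, 52, 51, 53, 52, 90, 54, 53]
print(forecast_anomalies(hourly_orders))  # → [5]
```

Only the spike at index 5 misses its forecast by more than 20%; the values after it are compared against forecasts that the median keeps close to normal levels.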
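A Bayesian network factors the joint probability of an observation into one conditional probability per variable given its parents, so each observation's likelihood can be scored and improbable combinations flagged. The sketch below uses a hypothetical three-node network in which a running promotion is the shared root cause of both high site traffic and high sales. The variables, probability tables, and 0.01 threshold are invented for illustration; in practice, the tables would be learned from data.

```python
# Hypothetical conditional probability tables (CPTs) for a three-node network:
# promo -> high_traffic and promo -> high_sales (promo is the shared root cause).
P_PROMO = {True: 0.1, False: 0.9}
P_TRAFFIC_GIVEN_PROMO = {True: 0.9, False: 0.2}  # P(high_traffic=True | promo)
P_SALES_GIVEN_PROMO = {True: 0.8, False: 0.1}    # P(high_sales=True | promo)

def bernoulli(p_true, value):
    """Probability of a boolean outcome, given P(True)."""
    return p_true if value else 1.0 - p_true

def joint_probability(promo, high_traffic, high_sales):
    """Factored joint: P(promo) * P(traffic | promo) * P(sales | promo)."""
    return (
        P_PROMO[promo]
        * bernoulli(P_TRAFFIC_GIVEN_PROMO[promo], high_traffic)
        * bernoulli(P_SALES_GIVEN_PROMO[promo], high_sales)
    )

def flag_unlikely(observations, threshold=0.01):
    """Return observations whose joint probability falls below `threshold`."""
    return [obs for obs in observations if joint_probability(*obs) < threshold]

observations = [
    (False, False, False),  # quiet day, no promotion
    (True, True, True),     # promotion drives traffic and sales
    (True, False, False),   # promotion running, yet traffic and sales stay flat
]
print(flag_unlikely(observations))  # → [(True, False, False)]
```

The flagged case has a probability of about 0.002: the network expects a promotion to lift both traffic and sales, so seeing neither is improbable and worth investigating.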

Benefits of anomaly detection

Businesses now work with thousands of different pieces of data, far too many to track manually, which makes errors harder to find. That's why anomaly detection is useful, as it can:

- Prevent data breaches or fraud. Without automated detection systems, outliers caused by cybercriminals can easily go undetected. Anomaly detection systems run constantly, scanning for anything unusual and flagging it for review right away.

- Find new opportunities. Not every anomaly is bad. Outliers in certain datasets can point to potential growth avenues, new target audiences, or other performance-enhancing strategies that teams can use to improve their return on investment (ROI) and sales.

- Automate reporting and result analysis. With traditional reporting methods, finding anomalies can take significant time. When businesses are working toward specific key performance indicators (KPIs), that delay can be costly. Automating anomaly detection means results can be reviewed much faster, so problems can be corrected quickly enough to meet business goals.

Best practices for anomaly detection

As with any automated system, the volume of results can become overwhelming. When first implementing anomaly detection, it's a good idea to:

- Choose the most effective technique for the type of data being assessed. With so many methodologies available, selecting one that works well with the kind of data under review is essential; for example, time-stamped data lends itself to forecasting approaches. Research this ahead of time to avoid complications.

- Have an established baseline to work from. Even seasonal businesses can find an average pattern with enough data. Knowing what normal behavior looks like in the data is the only way to tell which points don't fit expectations and could be anomalies.

- Implement a plan to address false positives. Manually reviewing possible false positives or applying a set of filters can prevent skewed datasets and avoid wasting time chasing anomalies that aren't real.

- Continually monitor systems for mistakes. Anomaly detection is an ongoing process. The more data the machine uses and learns from, the smarter it becomes and the easier it is to identify outliers. A human should still conduct manual reviews periodically to ensure the machine learns from accurate information rather than from datasets containing errors.
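The baseline and false-positive practices above can be combined in a short sketch. It builds a separate baseline for each weekday, so regular weekly seasonality (such as quieter weekends) isn't flagged, and then applies one simple filter: a flag is kept only if it persists for at least two consecutive periods. The weekly-seasonality assumption, the three-standard-deviation threshold, the persistence rule, the fallback spread of 1.0, and the sample data are all illustrative choices rather than recommendations.

```python
from collections import defaultdict
from statistics import mean, pstdev

def seasonal_baseline(history):
    """Build a per-weekday baseline (mean, spread) from (weekday, value) pairs.

    Assumption: the data has weekly seasonality, so each weekday is compared
    only with the same weekday in earlier weeks.
    """
    by_day = defaultdict(list)
    for weekday, value in history:
        by_day[weekday].append(value)
    return {day: (mean(vals), pstdev(vals)) for day, vals in by_day.items()}

def raw_flags(baseline, observations, threshold=3.0):
    """Flag each (weekday, value) more than `threshold` spreads from its weekday mean."""
    flags = []
    for weekday, value in observations:
        center, spread = baseline[weekday]
        spread = spread or 1.0  # arbitrary fallback when a weekday never varied
        flags.append(abs(value - center) / spread > threshold)
    return flags

def persistent(flags, min_run=2):
    """False-positive filter: keep only flags that occur in runs of at least `min_run`."""
    kept = [False] * len(flags)
    run_start = None
    for i, flag in enumerate(flags + [False]):  # sentinel closes the final run
        if flag and run_start is None:
            run_start = i
        elif not flag and run_start is not None:
            if i - run_start >= min_run:
                for j in range(run_start, i):
                    kept[j] = True
            run_start = None
    return kept

# Hypothetical daily visits, Monday (0) to Sunday (6), with quieter weekends
week1 = [100, 102, 98, 101, 99, 40, 42]
week2 = [104, 98, 102, 99, 101, 44, 38]
history = list(enumerate(week1)) + list(enumerate(week2))
new_week = list(enumerate([101, 99, 130, 100, 99, 90, 95]))

flags = raw_flags(seasonal_baseline(history), new_week)
print(flags)              # one-off jump on day 2, sustained rise on days 5 and 6
print(persistent(flags))  # only the weekend run survives the filter
```

The single-day jump on day 2 is filtered out, while the sustained weekend rise is kept. The trade-off is that a persistence filter also hides genuine one-off anomalies, so it suits noisy operational metrics better than cases such as fraud, where a single event can matter.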

Keep your business data protected 24/7 with automated data loss prevention (DLP) software to identify breaches or leaks.

Holly Landis

Holly Landis is a freelance writer for G2. She is also a digital marketing consultant, focusing on on-page SEO, copy, and content writing. She works with SMEs and creative businesses that want to be more intentional with their digital strategies and grow organically on channels they own. As a Brit now living in the USA, you'll usually find her drinking copious amounts of tea in her cherished Anne Boleyn mug while watching endless reruns of Parks and Rec.