Say you're emailing an important document to your colleagues.

Simple enough, right? You attach the document to your email and hit send. Your coworkers get the information they need almost instantly.

Now, think about this exchange of information at a larger scale.

Your company deals with suppliers, manufacturers, customers, and vendors. They share all kinds of information, such as inventory procurement data, product maintenance information, construction drawings, simulation models, quality planning data, contracts, business documents, and program source code. And all of this data comes in different formats.

How do you normalize this huge volume of data without changing its meaning? That's where data exchange comes in. Data exchange software offers data as a service (DaaS) capabilities that help providers and consumers share and source information with little friction. As a result, companies can gather market intelligence and fuel data-driven decisions with minimal effort.

What is data exchange?

Data exchange is the process of sharing data among companies, stakeholders, and data ecosystems without changing its inherent meaning during transmission. Data exchange transforms datasets to simplify data acquisition and to control secure data collaboration.

Data exchange ensures smooth data transfer between data providers and data consumers. Providers, data syndicators, and brokers share or sell data. Consumers collect or buy data from data providers.

A data exchange platform lets providers and consumers exchange, trade, source, and distribute data. These platforms help providers and consumers meet legal, security, technical, and compliance requirements.

Importance of data exchange

Companies produce, collect, and acquire large volumes of data from daily operations. However, this first-party data is rarely enough to make business decisions based on new insights. That's when companies become data consumers. They use verifiable second- and third-party data points to fill information gaps, analyze data, and meet intelligence needs.

On the other hand, data distributors that sell data don't always have as much information as they need. They use online data exchanges to monetize information assets and acquire data from other sources. When data is no longer useful to them, companies monetize it. Most companies both use data and sell it to other firms.

Why do businesses use data exchange?

Businesses use data exchange systems to:

- Improve business analytics, forecasts, and plans

- Discover insights to find prospects for campaigns

- Collect data to enrich machine learning or statistical models

- Use clickstream data to personalize the user experience and build recommendation engines

- Find demographic, social, and psychographic data to create 360° customer views

Businesses value data exchange because it addresses data quality, something the traditional process of buying and selling data often overlooks.

When data consumers bought datasets in the past, they found piles of duplicate records. Sometimes the data lacked regularity and normalization. Other times it contained missing records, nulls, invalid numbers, and unreadable labels. Data exchange software solutions address these problems by letting buyers preview the data and deal with quality issues before they purchase.
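
The kind of pre-purchase quality check described above can be sketched in a few lines of Python. This is only an illustration: the sample data, the `revenue` column, and the `profile` helper are hypothetical and do not come from any particular data exchange product.

```python
import csv
import io

# Hypothetical sample a provider might expose as a preview.
SAMPLE = """id,name,revenue
1,Acme,1200
2,Globex,
2,Globex,
3,Initech,abc
"""

def profile(csv_text):
    """Count duplicate rows, missing values, and invalid numbers in a preview."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    seen = set()
    report = {"rows": len(rows), "duplicates": 0, "missing": 0, "invalid_numbers": 0}
    for row in rows:
        key = tuple(row.items())
        if key in seen:
            report["duplicates"] += 1
        seen.add(key)
        value = row["revenue"]
        if value == "":
            report["missing"] += 1
        else:
            try:
                float(value)
            except ValueError:
                report["invalid_numbers"] += 1
    return report

report = profile(SAMPLE)
print(report)
```

A buyer could run a check like this on a sample and decide whether the cleanup effort is acceptable before committing to the full dataset.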

Data exchange also solves data discovery problems. Previously, organizations had to browse countless websites before acquiring data. Add to that the ordeal of price negotiations, contract signing, data cleansing, and integration. It's not a great equation for good business.

Data exchange systems make the whole process far easier for consumers and providers. Data consumers can use multi-criteria filtered search, sampling tools, and data visualization to find what they're looking for.

Who uses data exchange?

Data providers and consumers that use data exchange software solutions include:

- Organizations improving their data-driven decisions

- Supply chain, operations, and logistics teams looking for actionable insights

- Marketers who need actionable data on their target audiences

- Project managers fostering better data collaboration across teams

- Agencies looking for valuable audiences and insights for campaigns

- Publishers trying to understand reader demographics and increase conversions

- Technical support managers who need to identify software users' needs and facilitate the right training


History of data exchange

Data exchange as we know it today had its humble beginnings in the 1960s, when IBM and General Electric (GE) invented databases. Transferring data between databases wasn't necessary until the 1970s, when databases had finally accumulated enough data.

CSV

The need to transfer data led the IBM Fortran compiler to support the comma-separated values (CSV) format in 1972. Companies used CSV to collect data in tables and import it into another database.

CSV remains one of the most common data distribution methods even today. Large corporations, government bodies, and academic institutions use CSV to distribute data on the internet.
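
As a rough illustration of the table-to-table transfer described above, the following sketch exports a table to CSV and imports it into a second database using only Python's standard library. The `inventory` table and its rows are made up for the example.

```python
import csv
import io
import sqlite3

# Source database with a hypothetical inventory table.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE inventory (sku TEXT, description TEXT, quantity INTEGER)")
source.executemany(
    "INSERT INTO inventory VALUES (?, ?, ?)",
    [("A-1", "Bolt, 10 mm", 500), ("B-2", 'Washer "flat"', 1200)],
)

# Export: the csv module quotes fields that contain commas or quotes.
buffer = io.StringIO()
writer = csv.writer(buffer)
writer.writerow(["sku", "description", "quantity"])
writer.writerows(source.execute("SELECT sku, description, quantity FROM inventory ORDER BY sku"))
csv_text = buffer.getvalue()

# Import into a second database, matching columns by header name.
target = sqlite3.connect(":memory:")
target.execute("CREATE TABLE inventory (sku TEXT, description TEXT, quantity INTEGER)")
reader = csv.DictReader(io.StringIO(csv_text))
target.executemany("INSERT INTO inventory VALUES (:sku, :description, :quantity)", reader)

imported = target.execute(
    "SELECT sku, description, quantity FROM inventory ORDER BY sku"
).fetchall()
print(imported)
```

Note that CSV carries no type information: every field arrives as text, and here it is the `INTEGER` column declaration in the target table that turns the quantity back into a number.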

XML and JSON

Soon, companies realized they weren't exchanging entire tables of information. Instead, they were providing a limited set of records to end users. This need to provide access to a handful of records led them to application programming interfaces (APIs) that connected lightweight applications.

APIs made it easier to exchange small collections of hierarchical information. Sending data with APIs required two API calls: one for the base object and another for the list of tags from a relational database. This problem led to the invention of Extensible Markup Language (XML) in 1998 and JavaScript Object Notation (JSON) in 2001.

Companies quickly moved away from XML because its tags could end up larger than the data payload. JSON represents data with only key-value pairs and arrays, which keeps payloads compact. As a result, APIs started using JSON to connect applications.
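
To make the tag-overhead point concrete, here is a small sketch that serializes the same hypothetical record as JSON and as XML and compares the sizes. The record and element names are invented for the example, and the XML layout is only one of many possible encodings.

```python
import json
import xml.etree.ElementTree as ET

record = {"id": 42, "name": "Acme", "tags": ["supplier", "priority"]}

# JSON: key-value pairs and arrays map directly onto the record.
json_payload = json.dumps(record, separators=(",", ":"))

# XML: every value needs an opening and a closing tag.
root = ET.Element("record")
ET.SubElement(root, "id").text = str(record["id"])
ET.SubElement(root, "name").text = record["name"]
tags = ET.SubElement(root, "tags")
for tag in record["tags"]:
    ET.SubElement(tags, "tag").text = tag
xml_payload = ET.tostring(root, encoding="unicode")

print(len(json_payload), json_payload)
print(len(xml_payload), xml_payload)
```

For this record the XML version comes out longer, mostly because each value is wrapped in a pair of tags.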

Today, companies use API management tools to monitor APIs and facilitate data exchange.

Source Code Control System

Computer scientist Marc Rochkind invented a version control system called Source Code Control System (SCCS) while working at Bell Labs in 1972. Multiple code authors used SCCS and found they could collaborate efficiently using version control features such as diffs, merges, and branches.

Before SCCS, companies relied on manually compiling and integrating everyone's work into the code. SCCS made collaborating on the same code far easier.

CVS

Organizations used proprietary version control systems until computer scientist and professor Dick Grune released Concurrent Versions System (CVS) in 1986. Most open source projects used CVS to share code using free and open formats.

In 2005, Finnish software engineer Linus Torvalds created Git and moved his open source project, the Linux kernel, to it, and product companies followed.

Git and GitHub

Using a distributed design, Git made it easier to collaborate on source code. It stored every version of the code locally, and companies only needed to sync changes with the remote server. Easy version control let companies handle large volumes of diff, branch, and merge operations faster.

Unlike other version control systems, Git used a Merkle directed acyclic graph (DAG) structure that lets branches be pointers to commits. With virtually unlimited branches, Git made it easy for people to collaborate and work on the same code.
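
The idea of branches as pointers into a Merkle DAG can be sketched as follows. This is a deliberately simplified model, not Git's actual object format: real Git hashes trees, author data, and timestamps too, and the `commit` helper and its arguments are hypothetical.

```python
import hashlib

store = {}      # content-addressed object store: hash -> commit
branches = {}   # branch name -> commit hash (a movable pointer)

def commit(branch, content, extra_parents=()):
    """Create a commit whose ID is the hash of its content and parents."""
    parents = tuple(p for p in (branches.get(branch),) if p) + tuple(extra_parents)
    payload = repr((content, parents)).encode()
    commit_id = hashlib.sha1(payload).hexdigest()
    store[commit_id] = {"content": content, "parents": parents}
    branches[branch] = commit_id  # advancing a branch just moves the pointer
    return commit_id

first = commit("main", "initial import")
branches["feature"] = branches["main"]   # creating a branch copies one pointer
second = commit("feature", "add parser")
third = commit("main", "fix typo")
merge = commit("main", "merge feature", extra_parents=[branches["feature"]])

print(store[merge]["parents"])  # the merge commit has two parents
```

Because each commit ID depends on its parents' IDs, changing any earlier commit would change every ID after it, and creating a branch costs no more than storing one extra hash.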

The launch of GitHub in 2008 further improved source code collaboration, resulting in many open source projects.

Data exchange features

Data exchange systems offer the following features to help companies source data and derive insights.

Data normalization

Data normalization organizes similar data across records to produce clean data. The normalization process ensures logical data storage, minimizes data modification errors, simplifies queries, and eliminates redundancy and unstructured data. This feature lets companies standardize different information inputs, including phone numbers, addresses, and contact names.

Normalization uses normal forms to maintain database integrity and check dependencies between attributes and relations.

Common normal forms:

Companies generally use these three normal forms to normalize data.

- First normal form (1NF) requires a single value per cell and per record, eliminating repeating groups of entries.

- Second normal form (2NF) satisfies 1NF and moves subgroups of data that apply to multiple rows into a new table.

- Third normal form (3NF) ensures there are no dependencies among non-primary-key attributes, in addition to meeting 1NF and 2NF.

Most relational databases don't usually require more than 3NF to normalize data. However, companies use fourth normal form (4NF), fifth normal form (5NF), and sixth normal form (6NF) to handle complex datasets.
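
As a minimal sketch of what these normal forms mean in practice, the example below splits a flat, hypothetical order list into three tables with SQLite. The table and column names are assumptions made for illustration, and the schema is just one reasonable way to reach 3NF for this data.

```python
import sqlite3

# Unnormalized rows: a repeating group in "items" and customer details copied per order.
flat_orders = [
    (1, "Acme", "555-0100", "bolt;washer"),
    (2, "Acme", "555-0100", "nut"),
    (3, "Globex", "555-0199", "bolt"),
]

db = sqlite3.connect(":memory:")
db.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT UNIQUE, phone TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER REFERENCES customers(id));
    CREATE TABLE order_items (order_id INTEGER REFERENCES orders(id), item TEXT,
                              PRIMARY KEY (order_id, item));
""")

for order_id, name, phone, items in flat_orders:
    # The phone depends on the customer, not on the order, so customers get their own table.
    db.execute("INSERT OR IGNORE INTO customers (name, phone) VALUES (?, ?)", (name, phone))
    (customer_id,) = db.execute("SELECT id FROM customers WHERE name = ?", (name,)).fetchone()
    db.execute("INSERT INTO orders VALUES (?, ?)", (order_id, customer_id))
    # 1NF: one atomic value per cell, so each item becomes its own row.
    for item in items.split(";"):
        db.execute("INSERT INTO order_items VALUES (?, ?)", (order_id, item))

customer_count = db.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
item_rows = db.execute("SELECT order_id, item FROM order_items ORDER BY order_id, item").fetchall()
print(customer_count, item_rows)
```

After the split, a customer's phone number is stored once, so updating it cannot leave stale copies behind in other orders.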

DaaS

Data exchange solutions use the data as a service (DaaS) model to store data, process it, and offer analytics services. Companies turn to cloud service delivery to improve agility, enhance functionality, set up quickly, automate maintenance, and save costs.

DaaS works much like SaaS but didn't see widespread adoption until recently. Originally, cloud computing services handled application hosting and data storage rather than data integration, analytics, and processing. Today, low-cost cloud storage makes it easier for cloud platforms to manage and process data at scale.

Data management

Data management, the process of collecting, organizing, transforming, storing, and protecting data, starts with data acquisition at the control center. Once you acquire data, you continue with downstream processes such as data preparation, conversion, cataloging, and modeling. These steps help the data meet data analysis goals.

Efficient data management optimizes data usage across teams and organizations. It's also crucial for meeting policy and regulatory requirements.

Dynamic data exchange

Dynamic data exchange (DDE) transfers data with a messaging protocol. DDE shares data between applications using various data formats. Remote data exchange platforms that use dynamic data exchange help you update applications based on the availability of new data.

DDE uses a client-server model along with shared memory to exchange information. In this model, the client application requests information and the server application provides it. You can use DDE more than once to exchange data.

Data exchange automation

Data exchange automation helps companies save time, simplify data processing, and run data lifecycle tasks faster. Data exchange software systems with automation emulate manual actions to make processes more efficient.

Types of data exchange

Below are the four types of data exchange, depending on the data transfer relationships between data consumers and providers.

1. Peer-to-peer data exchange is the direct exchange of data between two different companies or two divisions within the same company. For example, a large corporation with multiple data warehouses can use peer-to-peer data exchange to share subsets of data between departments.

2. Private data exchange occurs when two companies share data over a secure channel. Common examples include industry-specific data exchange between users. Similarly, when a company shares data with suppliers who share it with customers, it's known as private data exchange.

This type of data exchange uses a representational state transfer (REST) API, a simple object access protocol (SOAP) web service, a message queue, file transfer protocol (FTP), electronic data interchange (EDI), or business-to-business (B2B) gateway technology.

3. Electronic data exchange operates through the cloud. This type of data exchange protects data with passwords and can make data available for download.

4. Data marketplace is a public data exchange open to companies willing to consume or supply data. For example, AWS Data Exchange from Amazon Web Services (AWS) is a global data marketplace serving various industries and functions. You'll also find niche data marketplaces that offer financial or healthcare data exchange services to consumers and providers.

Data exchange formats

Some of the common formats companies use to exchange data include:

- CSV

- XML

- JSON

- INTERLIS

- Apache Parquet

- GMT grid file format

- Generalized Markup Language (GML)

- YAML Ain't Markup Language (YAML), originally Yet Another Markup Language

- Resource Description Framework (RDF)

- Relative Expression-Based Object Language (REBOL)

- Any Transport over Multiprotocol Label Switching (MPLS), known as AToM

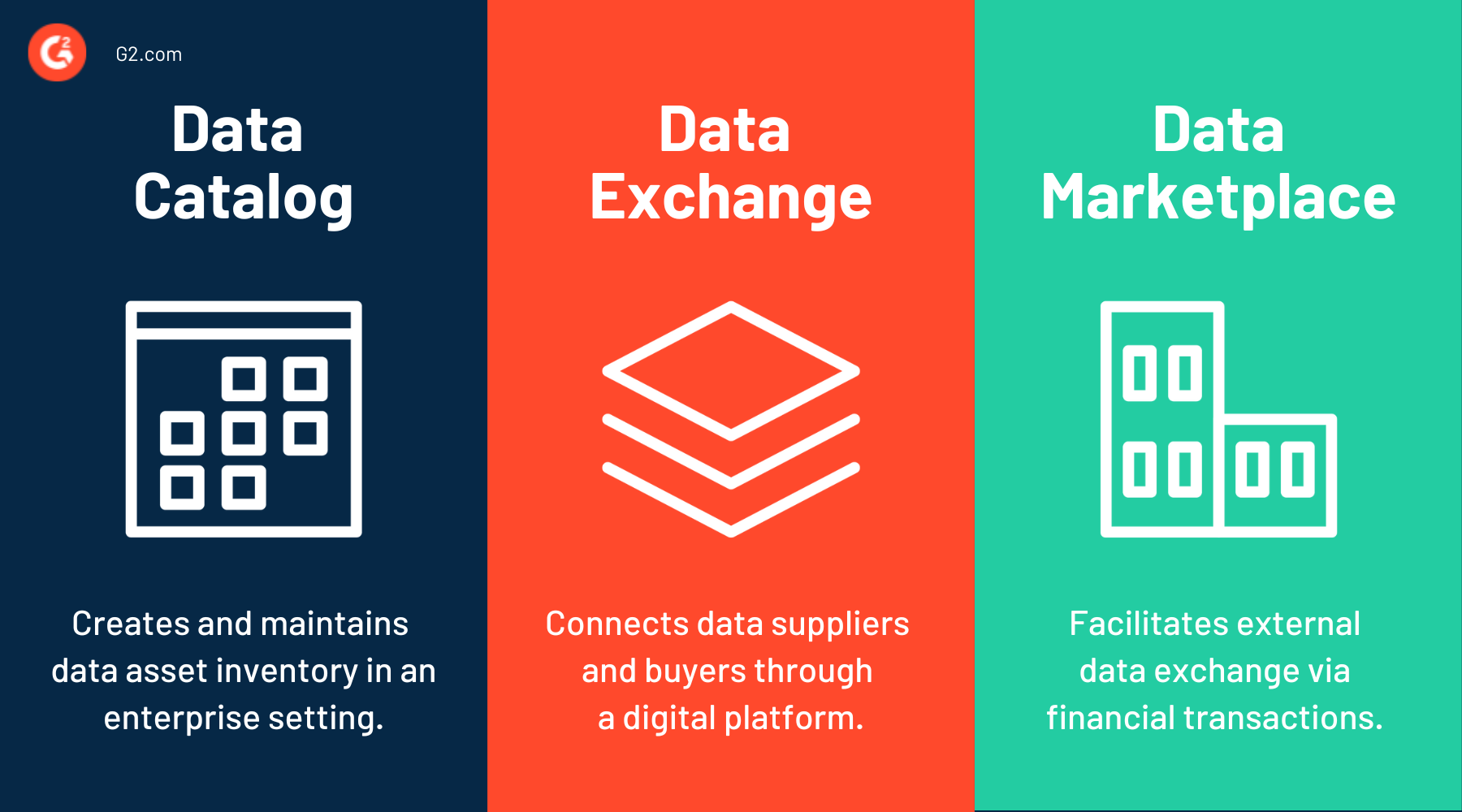

Data catalog vs. data exchange vs. data marketplace

A data catalog creates and maintains an inventory of data assets in a business environment. Business analysts, data engineers, and data scientists use data catalogs to extract business value from relevant datasets.

To automate data cataloging, machine learning data catalog tools use natural language queries and data masking solutions, enabling secure and efficient metadata discovery, ingestion, enrichment, and translation.

Data exchange platforms connect data providers and buyers through a digital data interface that simplifies how companies find, use, and manage relevant data. Data exchange interactions can be transactional or collaborative.

A data marketplace facilitates external data exchange through financial transactions. Data marketplaces let companies discover, publish, license, and distribute data. All data marketplaces are data exchanges, but marketplaces don't support non-financial use cases.

How does data exchange work?

Data exchange software solutions bring sellers and buyers together. This collaboration happens in the following steps.

- Partner agreements: Once buyers know what data they want, they sign agreements or contracts with sellers. These agreements define data exchange protocols, usage guidelines, and other collaboration principles.

- Node client setup: Depending on consumers' needs, providers set up nodes to share data over the network. These node clients let consumers request and receive data over a secure channel. Some companies use nodes only to automate the monitoring of data requests.

- Data standardization: Providers standardize and enrich data using agreed data formats.

- Information exchange: Data providers share data using node clients, and buyers receive the data.

Data exchange patterns

Data exchange patterns combine data formats, communication protocols, and architectural patterns to facilitate data exchange. Below, we break down some of the most common data exchange patterns.

API

APIs use hypertext transfer protocol (HTTP) and web services to communicate between applications. Web service approaches such as the following standardize interoperability between applications.

- SOAP is a standardized protocol that uses HTTP and simple mail transfer protocol (SMTP) to send messages. The World Wide Web Consortium (W3C) develops and maintains the SOAP standard specifications.

- REST is an architectural style that provides a set of guidelines for building RESTful web services.

- GraphQL and similar API design architectures feature a query and manipulation language along with an associated runtime.
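
To show what an HTTP-based, REST-style exchange can look like, here is a minimal sketch using only Python's standard library. The `/datasets/<id>` route, the `DATASETS` dictionary, and the handler class are hypothetical; a production API would add authentication, pagination, and error handling.

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical in-memory dataset catalog served by the provider.
DATASETS = {"1": {"id": "1", "name": "inventory", "records": 500}}

class DatasetHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Route GET /datasets/<id> to a single resource.
        parts = self.path.strip("/").split("/")
        dataset = DATASETS.get(parts[1]) if len(parts) == 2 and parts[0] == "datasets" else None
        body = json.dumps(dataset or {"error": "not found"}).encode()
        self.send_response(200 if dataset else 404)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the example output quiet

server = HTTPServer(("127.0.0.1", 0), DatasetHandler)  # port 0 picks a free port
threading.Thread(target=server.serve_forever, daemon=True).start()

# Consumer side: request one resource by its URL and decode the JSON body.
conn = http.client.HTTPConnection("127.0.0.1", server.server_port)
conn.request("GET", "/datasets/1")
response = conn.getresponse()
status = response.status
result = json.loads(response.read())
conn.close()

server.shutdown()
server.server_close()
print(status, result)
```

The consumer only needs the URL and the JSON shape of the response; it does not need to know how the provider stores the data.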

ETL

To read and write data, applications that transfer data need to connect to other databases. Extract, transform, and load (ETL) tools enhance database connections with data batching, transformation, and scheduling.

ETL solutions help companies gather data from multiple databases into a single repository for formatting and data analysis preparation. This unified data repository is key to simplifying data analysis and processing.
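
A bare-bones version of this extract, transform, and load flow might look like the sketch below. The two source databases, their table names, and the cleanup rules are assumptions for illustration. Real ETL tools add scheduling, batching, and error handling on top.

```python
import sqlite3

# Two hypothetical source databases with different conventions.
crm = sqlite3.connect(":memory:")
crm.execute("CREATE TABLE contacts (full_name TEXT, phone TEXT)")
crm.execute("INSERT INTO contacts VALUES ('Ada Lovelace', '(555) 010-0001')")

billing = sqlite3.connect(":memory:")
billing.execute("CREATE TABLE payers (name TEXT, tel TEXT)")
billing.execute("INSERT INTO payers VALUES ('alan turing', '555.010.0002')")

def extract():
    """Pull rows from every source as (name, phone) pairs."""
    yield from crm.execute("SELECT full_name, phone FROM contacts")
    yield from billing.execute("SELECT name, tel FROM payers")

def transform(rows):
    """Standardize name casing and keep only the digits of each phone number."""
    for name, phone in rows:
        digits = "".join(ch for ch in phone if ch.isdigit())
        yield name.title(), digits

def load(rows, warehouse):
    """Write the cleaned rows into the single target repository."""
    warehouse.execute("CREATE TABLE IF NOT EXISTS customers (name TEXT, phone TEXT)")
    warehouse.executemany("INSERT INTO customers VALUES (?, ?)", rows)

warehouse = sqlite3.connect(":memory:")
load(transform(extract()), warehouse)
unified = warehouse.execute("SELECT name, phone FROM customers ORDER BY name").fetchall()
print(unified)
```

Chaining generators keeps the three stages separate while still processing one row at a time.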

File transfer

The file transfer process uses a network or internet connection to store and move data from one device to another. Data exchange solutions use file transfer to share, transmit, or transfer logical data objects between local and remote users. JSON, XML, and CSV are common file formats used in the data exchange process.

Remote procedure call

Distributed computing uses remote procedure calls (RPC) to translate and send messages between client-server applications. RPC facilitates point-to-point communication during data exchange.

An RPC protocol asks a remote server to execute specific procedures based on the client's parameters. Once the remote server responds, RPC transfers the results to the calling environment.
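
The request-and-response cycle described above can be tried with XML-RPC, one RPC protocol that ships with Python's standard library. The `count_records` procedure and its data are hypothetical. Other RPC systems follow the same call pattern with different wire formats.

```python
import threading
from xmlrpc.client import ServerProxy
from xmlrpc.server import SimpleXMLRPCServer

def count_records(dataset, minimum):
    """Procedure executed on the server with parameters supplied by the client."""
    data = {"inventory": [3, 12, 40], "orders": [7]}  # hypothetical data
    return sum(1 for value in data.get(dataset, []) if value >= minimum)

server = SimpleXMLRPCServer(("127.0.0.1", 0), logRequests=False)  # port 0 picks a free port
server.register_function(count_records)
threading.Thread(target=server.serve_forever, daemon=True).start()

port = server.server_address[1]
with ServerProxy(f"http://127.0.0.1:{port}") as proxy:
    # Looks like a local call, but arguments and result travel over HTTP as XML.
    result = proxy.count_records("inventory", 10)

server.shutdown()
server.server_close()
print(result)
```

The client never sees the server's data structure. It only passes parameters and receives the computed result in its own environment.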

Brokered event-based messaging

Brokered event-based messaging uses middleware software to deliver data messages. In this process, different technical components manage queuing and caching. It relies on a business rules engine to manage publishing and subscription services.
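
The publish and subscribe flow can be illustrated with a toy in-memory broker. The `Broker` class and topic names below are invented for the example. Real middleware adds persistence, delivery guarantees, and routing rules that this sketch leaves out.

```python
from collections import defaultdict, deque

class Broker:
    """Minimal in-memory broker: queues messages per topic and fans them out."""

    def __init__(self):
        self.subscribers = defaultdict(list)   # topic -> callbacks
        self.queue = deque()                   # pending (topic, message) pairs

    def subscribe(self, topic, callback):
        self.subscribers[topic].append(callback)

    def publish(self, topic, message):
        # Publishers only enqueue; they never call subscribers directly.
        self.queue.append((topic, message))

    def dispatch(self):
        """Deliver every queued message to the subscribers of its topic."""
        delivered = 0
        while self.queue:
            topic, message = self.queue.popleft()
            for callback in self.subscribers[topic]:
                callback(message)
                delivered += 1
        return delivered

broker = Broker()
received = []
broker.subscribe("inventory.updated", received.append)
broker.subscribe("inventory.updated", lambda m: received.append({"audit": m["sku"]}))
broker.publish("inventory.updated", {"sku": "A-1", "quantity": 480})
broker.publish("orders.created", {"order_id": 7})  # no subscribers, so nothing is delivered
delivered = broker.dispatch()
print(delivered, received)
```

Because publishers and subscribers only know the broker and a topic name, either side can be added or replaced without changing the other.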

Data streaming

Data streaming is the process of receiving a continuous flow of data, or feed, from different sources. Data exchange tools use data streaming to receive data sequences and update metrics for each incoming data point. This data exchange pattern suits real-time monitoring and response activities.
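
Updating metrics for each incoming data point, as described above, can be done without storing the whole stream. The sketch below keeps a running count, mean, and maximum; the `sensor_feed` generator is a hypothetical stand-in for a real feed.

```python
def running_stats(stream):
    """Yield (count, mean, maximum) after each data point, without storing the stream."""
    count, mean, maximum = 0, 0.0, float("-inf")
    for value in stream:
        count += 1
        mean += (value - mean) / count      # incremental mean update
        maximum = max(maximum, value)
        yield count, mean, maximum

def sensor_feed():
    # Hypothetical feed; a real source would be a socket, queue, or log tail.
    yield from [20.0, 22.0, 27.0, 23.0]

snapshots = list(running_stats(sensor_feed()))
for snapshot in snapshots:
    print(snapshot)
```

Each snapshot is available as soon as its data point arrives, which is what makes this pattern a fit for monitoring dashboards and alerts.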

Consider your local and business needs before choosing a data exchange pattern.

In healthcare, using universal data exchange standards enables seamless data access and integration at every level of care.

Healthcare facilities use healthcare integration engines to ensure electronic health records (EHR) are accessible, reduce disparate data silos, and achieve better compatibility and compliance.

Healthcare data exchange standards

The Clinical Data Interchange Standards Consortium (CDISC) maintains the following standards for sharing structured data across information systems.

- Clinical trial registry (CTR)-XML takes a "write once, use many times" approach, using a single XML file for multiple clinical trial submissions.

- Operational data model (ODM)-XML is a vendor-neutral format that facilitates regulatory-compliant data exchange and archiving with metadata, reference data, and audit information. Electronic data capture tools frequently use ODM-XML for case report forms.

- Study/trial design model in XML (SDM-XML) uses three submodules (structure, workflow, and timing) to offer machine-readable descriptions of clinical study designs.

- Define-XML provides metadata that describes the structure of tabular datasets.

- Dataset-XML uses Define-XML to support the exchange of datasets.

- CDISC standards in Resource Description Framework (RDF) offer a linked data view of the CDISC standards.

- Laboratory data model (LAB) provides a standard model for acquiring and exchanging laboratory data.

Data exchange framework

A data exchange framework facilitates data transfer between systems. It defines the logic needed to read data from source files, transform data into compatible formats, and share transformed data with the target system. To facilitate this process, developers generally connect third-party and target systems to the framework.

Data exchange frameworks include the following functionality to help data consumers and providers interact.

- Searchable catalog simplifies finding data assets using the dataset description, including the number of records, file type, pricing, profile statistics, and ratings. Data consumers search these catalogs to find suitable datasets and evaluate the quality of sample data.

- Asset management lets you upload, manage, and publish data assets. Data providers use this functionality to specify data licenses and access rights and to manage inventory.

- Access control helps data providers define access rules for data assets. For example, a provider can restrict access to a dataset until payment or an agreement is complete. Some data exchange layers also offer encryption key exchange for file delivery.

- Data transfer is the process providers use to share data with consumers. Common data transfer methods include file transfer, multi-tenant data sharing, and APIs. Cloud-based transfer holds files and simplifies data access with object storage. Multi-tenant data sharing, on the other hand, requires providers and consumers to use the same data management platforms (DMPs) for transparency.

- Subscription management simplifies data asset subscription offerings for data providers. Some data exchanges also offer a "bring your own subscription" (BYOS) feature that connects different subscriptions through tokens.

- Transaction management offers payment transactions and payment processing via credit cards, bank transfers, and account invoicing. Data consumers track purchases and subscriptions, stay up to date on renewal terms, and modify subscriptions using transaction management modules.

- Account management collects details about users, buyers, and sellers, as well as payment mechanisms, billing information, and account activity.

- Administration lets data exchange operators monitor user activity and troubleshoot issues.

- Collaboration offers a secure area where providers and consumers can work together on datasets.

- Data enrichment improves quality with data standardization, address verification, deduplication, file merging, validation, and data cleansing.

- Selective sharing allows datasets to be configured for select consumers.

- Data mapping recommends supplementary data for further enrichment.

- Multi-tenant data sharing replaces FTP and removes the traditional data sharing headaches of copying and moving data.

- Connector software development kit (SDK) creates custom connectors so data exchange providers can access other data platforms.

- Derived aggregate data lets consumers run user-defined functions (UDFs) and receive aggregated output. Providers generally offer this functionality when they don't want consumers to access sensitive raw data.

- Enhanced onboarding simplifies provider onboarding with an assessment of provider data compliance.

- Alerts notify consumers when a new data publication matches what they're looking for.

- Pipeline management combines and integrates third-party data before delivering it to end users.

- Enhanced reporting shows data exchange sales performance and helps providers find insights to target the right buyers.

- Custom data products blend, segment, and engineer data to create data products suited to consumers.

- Change-of-custody prohibition prevents license term violations by offering sensitive data previews and testing.

Most data exchange solutions combine the features above to create easy, compliant transactions between data buyers and sellers.
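
As one illustration from the list above, the derived aggregate data feature could be sketched as follows. Everything here is hypothetical: the rows, the `run_aggregate` function, and the allowed aggregates. A real platform would also sandbox the consumer's function, since code run this way could otherwise leak rows through side effects.

```python
from statistics import mean

# Hypothetical sensitive rows held by the provider.
_RAW_ROWS = [
    {"region": "north", "salary": 52000},
    {"region": "north", "salary": 61000},
    {"region": "south", "salary": 48000},
    {"region": "south", "salary": 50000},
    {"region": "south", "salary": 58000},
]

ALLOWED_AGGREGATES = {"mean": mean, "max": max, "count": len}

def run_aggregate(udf, group_by, aggregate):
    """Apply a consumer-supplied function per row, then return only per-group aggregates."""
    if aggregate not in ALLOWED_AGGREGATES:
        raise ValueError(f"unsupported aggregate: {aggregate}")
    groups = {}
    for row in _RAW_ROWS:
        groups.setdefault(row[group_by], []).append(udf(dict(row)))  # pass a copy
    return {key: ALLOWED_AGGREGATES[aggregate](values) for key, values in groups.items()}

# Consumer-side usage: a UDF that converts annual salary to monthly.
monthly_mean = run_aggregate(lambda row: row["salary"] / 12, "region", "mean")
print(monthly_mean)
```

The consumer gets one number per region and never receives the individual rows.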

Benefits of data exchange

Whether your company wants to break down data silos, govern data access, or share data securely with customers, data exchange software has plenty to offer.

- Simplifies buying and selling data. Finding credible third-party data has been painful for data consumers, and that's before the challenges of price negotiation, data evaluation, and integration. Data exchange systems make it far easier for data providers to sell data and for buyers to purchase it.

- Makes it easier to source data for insights. Data exchanges provide faster access to data for companies looking to make crucial data-driven decisions. This ease of access helps companies increase revenue and improve forecasts with machine learning models.

- Optimizes data monetization opportunities. Companies that sell data traditionally relied on an intermediary to find suitable buyers. With data exchanges, sellers sell data on their own terms through an easily accessible platform.

- Facilitates data commercialization. Data exchange helps data originators and acquirers build an ecosystem that benefits both parties. Data exchanges help data buyers use newly found insights to make strategic moves while giving sellers opportunities to create new revenue streams.

- Improves data quality and minimizes wasted spend. Data exchanges help you access reliable data and eliminate data bots so you don't waste time on false leads. In addition, data exchange software delivers accurate data for correct targeting, leading to more successful business outcomes.

Challenges of data exchange

Data exchange solves some problems and creates others. Below are some common problems companies face with data exchange.

- Requires a robust data compliance policy. Without one, you can barely keep data management systems in sync. Compliance rules help companies define data management frameworks to track what data they share and with whom. These frameworks make it easier for data engineering teams to apply data access controls.

- Needs enough providers and consumers. Data exchange platforms without enough consumers find it hard to reach their full potential, and providers remain skeptical about listing their companies on these platforms. Perhaps that's why many cloud solutions with data exchange capabilities help systems win buyers and sellers.

- Depends on data integration and validation. Data consumers can't find insights unless they integrate the data with internal data management tools. This integration requires data exchange software that can validate, clean, and format raw data into a readable format.

- Needs some technical expertise. Companies can't navigate data exchange solutions without knowing how to package, filter, or validate data.

- Limits data filtering. Data exchanges often don't let buyers choose exactly what they need. Data acquirers can't create or buy datasets tailored precisely to their preferences.

Data exchange approach considerations

No single data exchange approach works for every company. Each method has its pros and cons, so keep these points in mind when choosing a data exchange approach for your business.

- Data complexity tells you whether you need direct database access. For example, if you don't have access to specific data entity components, you're better off with direct access. REST APIs, on the other hand, require multiple calls and extra code to build relationships among data elements. You can also use JSON and XML for more complex data models.

- Data update frequency reveals whether you have to replace data sets regularly. API and messaging system methods ensure better resynchronization when large data updates occur.

- Data set size determines whether you need a direct database connection or file transfer to optimize performance. You can also look for ways to improve performance when sending data through REST or other APIs.

- Data versions or schemas also help you choose between an API and other data exchange protocols. For example, APIs aren't ideal for representing different data formats. If your applications need data in multiple versions, you're better off with another data exchange protocol.

- Data security controls guide you toward the best data exchange approach. For example, you may need to design APIs that require keys, configure web servers, or set database management system (DBMS) security controls to protect data.

- Data transformation difficulty tells you what you need to move data. Extensive transformation with complex rules calls for a direct database connection and ETL tools. Also evaluate the transformation complexity to see whether API management platforms can help.

- Connection type is another decision to make before choosing an approach. Short-lived protocols suit a specific action or series of actions, while long-lived protocols keep connections open indefinitely. Consider end-user requirements when choosing connection persistence.
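
As one illustration of the security point in the list above, here is a minimal Python sketch of an API that requires a key. The `X-API-Key` header name, the `KEY_DIGESTS` table, the consumer name, and the demo key are all hypothetical; a real deployment would load keys from a secrets store and would normally sit behind TLS.

```python
import hashlib
import hmac

def digest(key: str) -> str:
    """SHA-256 digest of a key, so the key table never holds usable keys."""
    return hashlib.sha256(key.encode("utf-8")).hexdigest()

# Hypothetical key store: consumer name -> digest of the key issued to it.
KEY_DIGESTS = {"acme-retail": digest("demo-key-123")}

def authenticate(headers: dict):
    """Return the consumer name for a valid X-API-Key header, else None."""
    presented_digest = digest(headers.get("X-API-Key", ""))
    for consumer, stored in KEY_DIGESTS.items():
        # compare_digest takes constant time, which resists timing attacks.
        if hmac.compare_digest(presented_digest, stored):
            return consumer
    return None
```

A request handler would call `authenticate(request_headers)` first and reject the request when it returns `None`. Knowing which consumer made the call also supports tracking what data is shared and with whom.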

Successful organizations also consider broader organizational goals before deciding on the requirements for specific projects and applications. They collaborate and coordinate approaches to avoid data conflicts and inconsistencies across teams.

What do you need to consider before a data exchange?

- Data governance strategy

- User consent to share data

- User role and access management

- Data licensing and legal agreements

- Technical data exchange requirements

- Agreed terms for the output software platform used in the data exchange

Data exchange design best practices

A well-implemented data exchange requires correct data configuration and synchronization. Rely on the following best practices to design data exchange processes accurately and validate data throughout the implementation cycle.

- Check the XML schema registry before creating a new schema.

- Follow the exchange network's design rules and schema design standards.

- Split logical data groups into separate schema files.

- Use schema constraints effectively to ensure compatibility with your target database.

- Minimize required fields and use them only when necessary.

- Use count, list, or detail result sets to make data synchronization easier.

- Schedule large data transactions outside business hours.

- Use asynchronous methods for large data sets.

- Preprocess requests to assess their impact on the node.

- Simplify relational-to-XML data conversion with data staging.

- Choose a flexible schema design to optimize data return options.

- Limit query parameter options to avoid large data sets.

- Compress files to limit data transmission size.

- Use data differencing to identify changes since the last data transmission.

- Choose a simple, flexible naming convention for data services.

- Document data service parameters before the data exchange.
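
Two of the practices above, data differencing and compression, can be combined in a short Python sketch. It assumes records are JSON-serializable dictionaries keyed by an ID, and that the sender keeps a fingerprint of each record from the last transmission. The function names and the `upsert`/`delete` payload layout are illustrative choices, not a standard.

```python
import gzip
import hashlib
import json

def fingerprint(record: dict) -> str:
    """Stable hash of a record; sort_keys makes key order irrelevant."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

def diff(previous: dict, current: dict) -> dict:
    """Find changes since the last transmission.

    previous maps record id -> fingerprint sent last time;
    current maps record id -> full record as it is now.
    """
    changed = {rid: rec for rid, rec in current.items()
               if previous.get(rid) != fingerprint(rec)}
    deleted = sorted(rid for rid in previous if rid not in current)
    return {"upsert": changed, "delete": deleted}

def pack(delta: dict) -> bytes:
    """Serialize the delta to JSON and gzip it for transmission."""
    return gzip.compress(json.dumps(delta, sort_keys=True).encode("utf-8"))

def unpack(blob: bytes) -> dict:
    """Reverse of pack, as the receiving side would run it."""
    return json.loads(gzip.decompress(blob).decode("utf-8"))
```

Sending only the delta keeps routine synchronizations small, and a count of upserts and deletes gives the receiver a cheap way to sanity-check what arrived.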

Data exchange software

Data exchange software is used to share and transmit data without changing its meaning.

A data exchange solution must do the following to qualify for inclusion in the data exchange category:

- Share data without altering its meaning

- Normalize data to make it easier to consume

- Offer market data acquisition as a service

- Integrate with other data solutions to facilitate exchange and analysis

*Below are the top five data exchange platforms based on G2 data collected on July 18, 2022. Some reviews may be edited for clarity.

1. PartnerLinQ

PartnerLinQ is a supply chain visibility platform that streamlines data visibility and connectivity. The platform offers electronic data interchange (EDI), non-EDI, and API integration capabilities to connect multiple supply networks, marketplaces, real-time analytics, and core systems.

What users like:

“This platform remains one of the best data mapping platforms. The configuration is so ideal, improving the great management of supply chain issues. The interface layout is so marginal, improving high performance. The support offered to users is right on point.”

– PartnerLinQ review, Chris J.

What users dislike:

“The price is expensive, I think. And a little more analytics development would be more useful.”

– PartnerLinQ review, Rashad G.

2. Crunchbase

Crunchbase is a leading provider of prospecting and research solutions. Companies, sales teams, and investors use the platform to find new business opportunities.

What users like:

“The most helpful thing about Crunchbase is the powerful filters you can use to create super-targeted lists of companies you want to reach out to for future collaborations.”

– Crunchbase review, Aaron H.

What users dislike:

“The only problem I found was that if you use the website's query function instead of the API, you get relatively messy data that you need to clean before you can process it properly. You can get around this problem by using the API, but you'll need basic knowledge of JSON.”

– Crunchbase review, Kasra B.

3. Flatfile

Flatfile is a data onboarding platform that lets companies import clean, ready-to-use data faster. The platform automates column-matching recommendations and lets you set target data models for data validation.

What users like:

“Flatfile is a powerful import tool that just works. It has all the features you would expect from an importer plus those you wouldn't initially consider. For developers, their API is well documented and their support was always available to discuss approaches. We've made Flatfile a critical part of our onboarding process and it has worked great.”

– Flatfile review, Ryan F.

What users dislike:

“The only minor issue is that the client-side version isn't as full-featured as the version that sends data to Flatfile's backend, which means column matching isn't as smart. But this is really minor: I would strongly recommend Flatfile.”

– Flatfile review, Rob C.

4. AWS Data Exchange

AWS Data Exchange simplifies how companies use the cloud to find third-party data.

What users like:

“It's impressive to find hundreds of commercial data products from leading data providers across categories like retail, financial services, healthcare, and more.”

– AWS Data Exchange review, Ahmed I.

What users dislike:

“Subscription prices are costly and it becomes very difficult to manage the budget.”

– AWS Data Exchange review, Mohammad S.

5. Explorium

Explorium is a data science platform that connects thousands of external data sources using automatic data discovery and feature engineering. Companies use the platform to acquire data and make predictions that drive business decisions.

What users like:

“The richness and breadth of the data is incredible. I really like the instant access to the most useful and reliable external data. It helps us provide better customer service because it's the information we need to make faster and better decisions. The platform is very easy to use and extremely versatile.”

– Explorium review, Ishi N.

What users dislike:

“I wish they had more data sources over time.”

– Explorium review, Noa L.

Harmonize master data governance across all business domains

When you're ready to sync tools, processes, and applications across the enterprise with a single source of truth (SSOT), let data exchange platforms free you from data silos. De-silo your insights and make better data-driven decisions.

Leverage master data management (MDM) to create a trusted view of data and achieve operational efficiency.

Sudipto Paul

Sudipto Paul is a former SEO Content Manager at G2 in India. These days, he helps B2B SaaS companies grow their organic visibility and referral traffic from LLMs with data-driven SEO content strategies. He also runs Content Strategy Insider, a newsletter where he regularly breaks down his insights on content and search. Want to connect? Say hi to him on LinkedIn.