For decades, researchers and experts have mulled over how artificial intelligence could turn the tables for the tech industry.

John McCarthy coined the term artificial intelligence (AI) in his proposal for the 1956 Dartmouth workshop, to describe machines that could emulate the human brain and cognitive intellect. About a decade later, researchers at the Stanford Research Institute built Shakey, a mobile robot that mimicked human decision-making by reasoning about its own actions.

Today, machine learning operationalization (MLOps) software and other AI tools are being adopted across major industrial sectors to find patterns in big data and to automate and optimize the work that depends on it.

What are the types of artificial intelligence?

- Narrow AI (Weak AI): Performs specific tasks and cannot generalize beyond them, like Siri.

- Artificial general intelligence (AGI): Would mimic human-like learning and reasoning across any domain. It remains theoretical.

- Artificial superintelligence (ASI): Hypothetical AI that surpasses human intelligence in all aspects.

- Reactive machine AI: Responds to stimuli in real-time without memory, such as IBM's Deep Blue.

- Limited memory AI: Stores knowledge to learn and improve over time.

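- Theory of mind AI: Would understand and respond to human emotions and intentions. It is still theoretical.

- Self-aware AI: Would possess human-level consciousness, including awareness of its own emotions and internal states. It is hypothetical.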

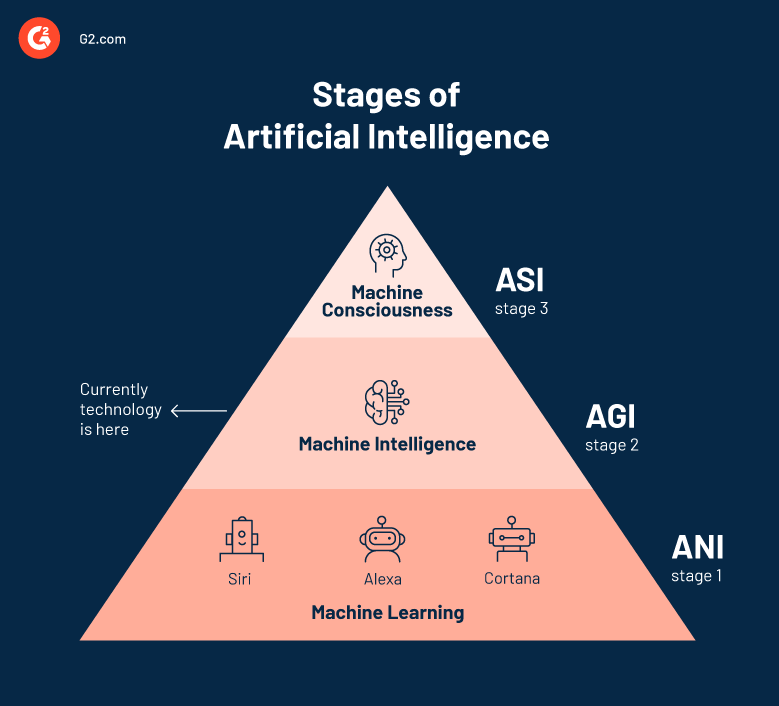

AI has come a long way from its early history to its present-day form. At the root of the AI tree is machine learning, which branches out into more advanced stages such as machine intelligence, machine consciousness, and machine awareness.

Artificial narrow intelligence (ANI)

Narrow or weak AI is designed to perform a single task, or a narrow set of closely related tasks, within a limited domain. It cannot transfer what it has learned to intellectual tasks outside that domain. Narrow AI handles one particular job well in a well-defined scenario. Some examples are Google Assistant, Alexa, and Siri.

Did you know? The AI market was valued at USD 51.08 billion in 2020 and is projected to reach USD 641.30 billion by 2028, at a CAGR of 36.1%.

Source: Verified Market Research

Examples of narrow AI

Customer service chatbots, like those used by companies such as Zendesk or Drift, employ narrow AI to answer common customer inquiries, troubleshoot issues, and provide support, all within a limited context.
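To see what "a limited context" means in practice, here is a deliberately tiny, rule-based sketch of a narrow support bot. The topics, replies, and names (`FAQ_ANSWERS`, `FALLBACK`, `answer`) are all hypothetical. Commercial chatbots such as Zendesk's or Drift's use trained language models instead of keyword lookup. The sketch only shows the defining trait of narrow AI: it answers inside its domain and hands off everything else.

```python
import re

# Hypothetical FAQ table: this bot "knows" only these three topics.
FAQ_ANSWERS = {
    "password": "You can reset your password from the sign-in page.",
    "refund": "You can request a refund from your order history.",
    "shipping": "Most orders ship within two business days.",
}
FALLBACK = "Sorry, I can only help with passwords, refunds, and shipping. Let me connect you to an agent."


def answer(question: str) -> str:
    """Return a canned reply if the question mentions a known topic, else hand off."""
    words = set(re.findall(r"[a-z]+", question.lower()))
    for keyword, reply in FAQ_ANSWERS.items():
        if keyword in words:
            return reply
    # Anything outside the narrow domain is escalated instead of answered.
    return FALLBACK


print(answer("How do I reset my password?"))
print(answer("What is the capital of France?"))
```

The second question falls back to a human agent. That is the "limited playing field" of narrow AI.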

Applications like Google Photos and facial recognition systems leverage narrow AI to identify and categorize images, recognizing faces, objects, and scenes within photos.

Artificial general intelligence (AGI)

AGI is often described as the future of digital technology, in which self-assisting robots would emulate human perception and movement. With AGI, machines would be able to see, interpret, and respond to external information much as the human nervous system does. Advances in artificial neural networks are expected to drive progress toward AGI systems that could, over time, help run businesses.

Examples of AGI

As of now, true AGI does not exist, and all existing AI systems are classified as narrow AI. However, researchers are exploring theoretical examples and ongoing projects that aim to achieve AGI. For instance, models like OpenAI's GPT-4 and DeepMind's AlphaFold exhibit advanced capabilities in language processing and biological understanding, respectively, yet they remain limited to specific domains.

Initiatives in cognitive architectures and artificial consciousness research strive to replicate human cognitive processes, while general-purpose AI models envision systems capable of learning and performing any intellectual task akin to human abilities. These endeavors reflect the ongoing quest to develop AGI, which remains a significant goal in the field of artificial intelligence.

Artificial superintelligence (ASI)

Artificial superintelligence is a futuristic concept that, until now, has only been the premise of sci-fi movies. ASI would be the ultimate dominator, because it would enable machines to design their own improvements and outclass humanity. It would give machines cognitive abilities, feelings, and emotions beyond our own. Thankfully, for now, it's just a proposition.

7 main branches of artificial intelligence

1. Machine learning is the core branch of AI. It enables machines to learn patterns from data and use them to make predictions or decisions without being explicitly programmed for every case.

2. Deep learning is a subset of machine learning that uses neural networks with many layers, such as convolutional neural networks, to extract and classify different features of data.

3. Natural language processing enables computers to understand, interpret, and generate human language for human-computer communication. It is widely used to build conversational chatbots.

4. Robotics deals with designing, constructing, and operating robots that imitate human actions and, in some cases, converse with humans. The related term robotic process automation (RPA) refers to software bots that automate repetitive, rule-based digital tasks.

5. Expert systems imitate a human expert's decisions by applying a knowledge base of if-then rules through an inference engine.

6. Fuzzy logic expresses the degree of truth of an output as a value between 0 (completely false) and 1 (completely true), rather than a strict true or false. For example, a temperature can be partly "warm" and partly "hot" at the same time.

7. The random forest algorithm is an "ensemble" method. It trains many decision trees on random samples of the data and combines their predictions, by majority vote or averaging, to improve output accuracy.
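Fuzzy logic's "degree of truth" is easiest to see in code. The sketch below uses triangular membership functions, which are one common choice among several. The temperature ranges in `FUZZY_SETS` are assumptions picked for illustration, and the function names are hypothetical. In this sketch, a single reading belongs partly to more than one set.

```python
def triangular(x: float, a: float, b: float, c: float) -> float:
    """Membership degree in a triangular fuzzy set: 0 at a, rising to 1 at b, back to 0 at c."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


# Hypothetical fuzzy sets for room temperature in °C, given as (start, peak, end).
FUZZY_SETS = {
    "cold": (0, 10, 20),
    "warm": (15, 20, 25),
    "hot": (20, 30, 40),
}


def fuzzify(temperature: float) -> dict:
    """Degree (0 to 1) to which the temperature belongs to each set."""
    return {label: triangular(temperature, *abc) for label, abc in FUZZY_SETS.items()}


print(fuzzify(22))  # {'cold': 0.0, 'warm': 0.6, 'hot': 0.2}
```

Classic Boolean logic would force 22 °C to be either warm or not warm. In fuzzy logic, the same reading is warm to a degree of 0.6 and hot to a degree of 0.2.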

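The second classification, known as functional AI, groups systems by how they work and covers major AI applications in the commercial sphere and social media. Type 2 systems range from machines that simply react to their current input to systems that learn from past data.

This classification includes four types of AI. Only the first two, reactive machines and limited memory AI, have been built and tested to date, and the other two remain theoretical.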

Reactive machines AI

Reactive machines are the most basic type of AI. They cannot form memories or use past experiences to influence present decisions; they can only react to the situation in front of them, hence “reactive.”

Reactive machines have no concept of the world and cannot function beyond the simple tasks for which they are programmed. A characteristic of reactive machines is that no matter the time or place, they will always behave the way they were programmed. There is no growth with reactive machines, only stagnation in recurring actions and behaviors.

Example of reactive machines

A well-known example of a reactive machine is Deep Blue, a chess-playing supercomputer built by IBM, whose development began in the mid-1980s.

Deep Blue was created to play chess against a human competitor with the intent to defeat the competitor. It was programmed to evaluate chess positions and to search ahead through possible moves and countermoves so it could choose the strongest move. Deep Blue played Russian chess grandmaster Garry Kasparov in two matches. Kasparov won the first in 1996, but Deep Blue won the 1997 rematch 3½ to 2½ games, becoming the first computer to defeat a reigning world chess champion in a match under standard tournament conditions.

Source: Scientific American

Deep Blue’s skill at accurately and successfully playing chess highlighted its reactive abilities. By the same token, its reactive design means it has no concept of the past or future. It only comprehends and acts on the board in front of it and the pieces on it. Put simply, reactive machines are programmed for the here and now, not the before and after.
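Deep Blue chose its moves by searching ahead from the current position, using a highly parallel alpha-beta search. The sketch below applies plain minimax, without alpha-beta pruning, to a toy take-away game that is assumed here purely for illustration: players take turns removing 1 to 3 stones, and whoever takes the last stone wins. The function names are hypothetical. Like a reactive machine, `best_move` looks only at the current pile and keeps no memory of earlier moves or games.

```python
MOVES = (1, 2, 3)  # a player may remove 1, 2, or 3 stones per turn


def minimax(stones: int, maximizing: bool) -> int:
    """Score a position from the maximizing player's view: +1 = win, -1 = loss."""
    if stones == 0:
        # No stones left: the player who just moved took the last one and won.
        return -1 if maximizing else 1
    scores = [minimax(stones - take, not maximizing) for take in MOVES if take <= stones]
    return max(scores) if maximizing else min(scores)


def best_move(stones: int) -> int:
    """Pick a move by searching ahead from the current pile size alone (no stored history)."""
    legal = [take for take in MOVES if take <= stones]
    return max(legal, key=lambda take: minimax(stones - take, maximizing=False))


print(best_move(7))  # 3 (leaves 4 stones, a losing position for the opponent)
```

Given the same pile size, the function always returns the same move. This is the "always behave the way they were programmed" property described above.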

Limited memory AI

Limited memory AI consists of systems, often trained with supervised learning, that derive knowledge from historical data or real-life events. Unlike reactive machines, limited memory AI learns from the past by observing actions or data fed to it to build a good-fit model.

Although limited memory AI combines observational data with the pre-programmed data the machine already contains, these observations are fleeting: they are held only briefly rather than stored permanently. Autonomous vehicles are an existing form of limited memory AI.
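The "fleeting" memory described above can be sketched as a fixed-size window of recent observations with a simple model fitted to it. In the example below, a hypothetical tracker estimates the speed of the car ahead from its last few position readings, using a least-squares slope. The class name, window size, and readings are assumptions for illustration. Real autonomous-driving stacks are far more complex.

```python
from collections import deque


class LimitedMemoryTracker:
    """Hypothetical tracker for the car ahead that remembers only its last few readings."""

    def __init__(self, window: int = 3) -> None:
        # deque(maxlen=...) silently drops the oldest reading when a new one arrives.
        self.readings = deque(maxlen=window)

    def observe(self, t: float, position: float) -> None:
        self.readings.append((t, position))

    def estimated_speed(self) -> float:
        """Least-squares slope of position over time across the remembered window."""
        n = len(self.readings)
        if n < 2:
            raise ValueError("need at least two readings")
        t_mean = sum(t for t, _ in self.readings) / n
        x_mean = sum(x for _, x in self.readings) / n
        num = sum((t - t_mean) * (x - x_mean) for t, x in self.readings)
        den = sum((t - t_mean) ** 2 for t, _ in self.readings)
        if den == 0:
            raise ValueError("readings need distinct timestamps")
        return num / den


tracker = LimitedMemoryTracker(window=3)
for t, x in [(0, 10), (1, 12), (2, 14), (3, 16), (4, 18)]:
    tracker.observe(t, x)
print(len(tracker.readings), tracker.estimated_speed())  # 3 2.0
```

Only the three most recent readings survive, so the estimate adapts quickly when the car ahead slows down. The trade-off is that older history is lost.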

Examples of limited memory AI

Autonomous vehicles, or self-driving cars, work on a combination of observational knowledge and computer vision. To drive safely among human-driven vehicles, self-driving cars segment their environment, detect patterns or changes in external factors, and adjust accordingly.

Note: Tesla has said its Autopilot hardware offers 40 times the processing power of the previous generation, along with advanced sensor technology, and positions it as the future of driving.

Self-driving vehicles not only observe their environment but also detect and label objects such as other cars and pedestrians, and they track how traffic around them changes over time. Previously, driverless cars without limited memory AI took 100 seconds to react and make judgments about external factors. Since the introduction of limited memory, reaction times for machine-based observations have dropped sharply.

Virtual assistants use limited memory AI to provide personalized user experiences by remembering past interactions. These assistants analyze user preferences, such as frequently asked questions, preferred settings, or previous commands, to improve their responses and suggestions over time.

For instance, platforms like Amazon Alexa and Google Assistant can recall past requests, allowing them to offer tailored recommendations, manage smart home devices, and enhance user engagement. This memory of prior interactions helps virtual assistants adapt and respond more effectively, making them increasingly useful in daily tasks.
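One simple way to picture "remembering past interactions" is a per-user tally of previous requests that drives suggestions. The sketch below uses Python's `collections.Counter`. The class name and sample requests are hypothetical, and commercial assistants such as Alexa and Google Assistant use far richer models of user preferences.

```python
from collections import Counter


class AssistantMemory:
    """Hypothetical per-user memory of past requests for a voice assistant."""

    def __init__(self) -> None:
        self.request_counts = Counter()

    def record(self, request: str) -> None:
        # Normalize so "Weather" and "weather" count as the same request.
        self.request_counts[request.strip().lower()] += 1

    def suggestions(self, k: int = 3) -> list:
        # most_common orders by count; ties keep first-seen order.
        return [request for request, _ in self.request_counts.most_common(k)]


memory = AssistantMemory()
for request in ["Weather", "lights off", "weather", "set timer"]:
    memory.record(request)
print(memory.suggestions(2))  # ['weather', 'lights off']
```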

Theory of mind AI

As the name suggests, theory of mind AI would understand that people have their own ideas, decisions, and thought patterns, and it would take them into account. Some machines currently exhibit humanlike capabilities, but none can hold a conversation to human standards. Even the most advanced robots in the world lack emotional intelligence (the ability to recognize, understand, and respond appropriately to emotions).

This future class of machine would understand that people have thoughts and emotions that affect their behavior, and it would factor those into its own thought process. Social interaction is a key facet of human life, so to make theory of mind machines tangible, the AI systems controlling such robots would have to identify, understand, retain, and remember emotional responses.

Such machines would process human commands and feed them into their learning centers to understand the rules of basic communication and interaction. Theory of mind is an advanced, proposed form of artificial intelligence that would require machines to recognize rapid shifts in human emotional and behavioral patterns. Harmonizing interactions at this level will require extensive testing and abstract thinking.

Example of the theory of mind AI

Some elements of the theory of mind AI currently exist or have existed in the recent past. Two notable examples are the robots Kismet and Sophia, created in 2000 and 2016, respectively.

Kismet

Kismet, developed by Professor Cynthia Breazeal, could recognize human facial signals (emotions) and replicate those emotions with its own face, which had human facial features: eyes, lips, ears, eyebrows, and eyelids.

Source: YouTube

Sophia

Sophia, on the other hand, is a humanoid bot created by Hanson Robotics. What distinguishes her from previous robots is her physical likeness to a human being as well as her ability to see (image recognition) and respond to interactions with appropriate facial expressions.

Source: YouTube

These two humanlike robots mark steps toward full theory of mind AI systems, which may materialize in the near future. Neither can hold a full-blown human conversation with an actual person, but both have aspects of emotive ability akin to those of their human counterparts. Such initiatives have pushed AI closer to human society.

Self-awareness AI

Self-aware AI involves machines with human-level consciousness. Although this form of AI is not currently in existence, it would be considered the most advanced form of artificial intelligence known to man.

Facets of self-aware AI include the ability to not only recognize and replicate humanlike actions but also to think for itself, have desires, and understand its feelings. Self-aware AI, in essence, is an advancement and extension of the theory of mind AI. Where the theory of mind only focuses on the aspects of comprehension and replication of human practices, self-aware AI takes it a step further by implying that it can and will have self-guided thoughts and reactions.

Cross that bridge when you come to it

Today's AI sits in tier two of the four types of artificial intelligence, and early elements of tier three are already emerging. Given that, believing we could eventually reach the fourth (and final?) tier doesn’t seem like a far-fetched idea.

But for now, it’s important to focus on perfecting all aspects of types two and three in AI. Sloppily speeding through each AI tier could be detrimental to the future of artificial intelligence for generations to come.

This article was originally published in 2022. It has been updated with new information.

Rebecca Reynoso

Rebecca Reynoso is the former Sr. Editor and Guest Post Program Manager at G2. She holds two degrees in English, a BA from the University of Illinois-Chicago and an MA from DePaul University. Prior to working in tech, Rebecca taught English composition at a few colleges and universities in Chicago. Outside of G2, Rebecca freelance edits sales blogs and writes tech content. She has been editing professionally since 2013 and is a member of the American Copy Editors Society (ACES).