Every established company requires healthy servers to operate in today’s complex IT environment. Important IT assets such as applications, databases, and networks are all connected and hosted by servers. If servers fail, so do the connected IT assets.

Most organizations have encountered disruptions in server performance, which lead to customer dissatisfaction and revenue loss. To avoid such situations, companies are now opting for server monitoring software, a tool that ensures servers, both physical and virtual, perform at the level required by their service-level agreement (SLA).

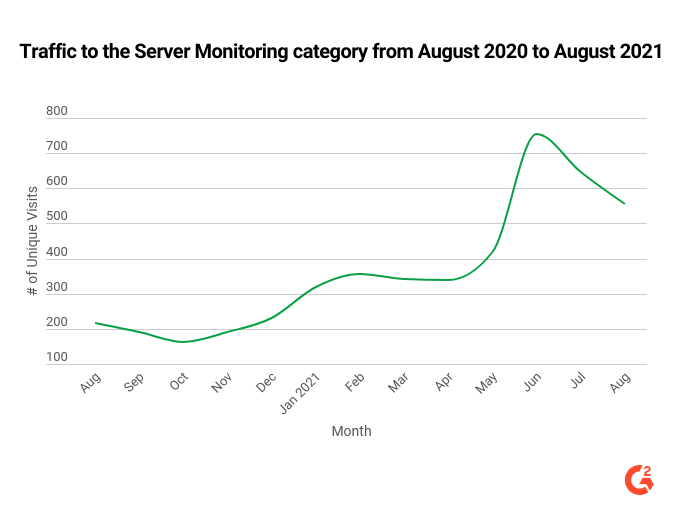

G2 sees rising interest in server monitoring software

G2 data highlights a 157% increase in traffic to G2’s Server Monitoring software category from August 2020 to August 2021. This shows that server monitoring tools are in increasing demand because companies need to ensure their server performance meets their needs.

A server monitoring solution can take a long time to deliver a return on investment (ROI), which is undesirable. According to the G2 Grid® Report for Server Monitoring Fall 2021, the ROI period for server monitoring software is 25 months, compared to 15 months for application performance monitoring (APM) software. APM is comparable to server monitoring as it focuses on monitoring software and application performance.

When buying a server monitoring product, detailed research is necessary. In this article, I will introduce the fundamentals of server monitoring and emphasize some best practices so companies can make the best use of this technology.

Understanding servers: diving into the fundamentals

Before we jump into what server monitoring is, let’s take a look at the basics.

What is a server?

A server is hardware or software that provides resources or services to other systems and applications. There are many types of servers—web servers, application servers, email servers, internet servers, and so on. They can all be categorized into two types of servers: software and hardware.

Software vs. hardware server

- Software server: A software server is an application that provides services for clients, such as storing and transferring data, relocating files, translating different computer languages, connecting to the internet, allocating resources, and so on. For example, an email server can store and transfer mail files for email clients. By setting up the connection between user devices and email servers, users can access their emails without installing an email hosting infrastructure on their devices. The software server can be installed anywhere—hardware servers, virtual servers, or in the cloud.

- Hardware server: A hardware server is a physical device with an operating system that the software server runs on. Since it hosts all the software, the hardware server is also called the host. Hardware servers can be workstations, server racks, mainframes, and so on. But no matter the size and type, all hardware servers are connected to a network and run software that provides services to other systems.

To ensure desirable server performance, companies need to monitor both the hardware and the software server. If the hardware malfunctions, the software lacks the resources to provide proper service. If the company monitors only the software server, the IT admin may look for the problem in the software and find nothing, because the fault lies in the hardware.

The same logic applies to monitoring only the hardware. Much enterprise-grade hardware comes with a basic status report on its temperature, resource usage, and so on, but this is no longer enough. For example, overheating hardware can cause bottlenecks in server performance or even damage data. The hardware may overheat because it is aging or poorly installed, but overheating can also be caused by malfunctioning software that consumes too many resources. Unless IT admins also monitor the software server, they won’t know. If they simply replace the hardware, the malfunctioning software can cause the same overheating issue again.
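The reasoning about overheating can be illustrated with a minimal Python sketch. It assumes a monitoring tool already supplies a temperature reading and per-process CPU shares. The `HostSnapshot` type, the `triage_overheating` function, and both thresholds are hypothetical names and values chosen for illustration, not part of any particular product.

```python
from dataclasses import dataclass


@dataclass
class HostSnapshot:
    """One combined reading from the hardware and the software side."""
    temperature_c: float                 # hardware sensor reading
    process_cpu_pct: dict[str, float]    # CPU share per process name


def triage_overheating(snapshot: HostSnapshot,
                       temp_limit_c: float = 80.0,    # assumed threshold
                       cpu_limit_pct: float = 90.0):  # assumed threshold
    """Suggest where to look first when a host runs hot."""
    if snapshot.temperature_c < temp_limit_c:
        return "ok"
    # The host is hot: check whether any process is consuming excessive CPU.
    hot = [name for name, cpu in snapshot.process_cpu_pct.items()
           if cpu >= cpu_limit_pct]
    if hot:
        return "suspect software: " + ", ".join(sorted(hot))
    # No runaway process found, so the hardware itself is the likelier cause.
    return "suspect hardware"
```

With hardware data alone, the second branch is impossible to reach: the sketch can only name a runaway process because it sees both the host temperature and the software's resource usage in the same snapshot.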

| TIP: An important issue is to identify what needs to be monitored to ensure that the server runs, but the bigger issue is to know how the server interacts with other infrastructure or services—because the applications depend on both. The right monitoring tool must have insights into server hosts (hardware) and server logs (software) for all the dependent infrastructure and protocols. |

The systematic way to perfect IT infrastructure

Server monitoring systematically tracks and improves the performance of servers. Since servers connect to every application, service, and system, server monitoring helps ensure that both the server and the client perform efficiently.

Every server is different because it is specifically adapted to the client. In addition to basic server metrics such as CPU utilization, memory usage, storage capacity, and network bandwidth, advanced metrics that are specific to client applications, services, and systems also need to be tracked to ensure high performance. This can be complicated because the metrics change based on the server type.

For example, a web server is different from an operating system server. For web servers, common metrics center around data requests, response times, data packets, and so on. These data can indicate a variety of potential problems such as insufficient bandwidth allocation and poor web design. But for operating system servers like Linux, metrics would focus not only on CPU utilization and memory but also on swap, which Linux reports separately from physical memory. Swap space on disk is used when physical memory (RAM) runs short, so to get a full picture of memory usage, IT administrators need to track both memory and swap in the Linux system.
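As a rough illustration of tracking memory and swap together, the sketch below parses text in the format of Linux’s `/proc/meminfo`. The function names and the sample values are assumptions made for this example. On a real Linux host, the text would come from reading `/proc/meminfo` rather than from the hard-coded sample.

```python
def parse_meminfo(text: str) -> dict[str, int]:
    """Parse /proc/meminfo-style text into {field name: value in kB}."""
    values = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if not sep:
            continue  # skip lines that are not "Name: value" pairs
        parts = rest.split()
        if parts and parts[0].isdigit():
            values[name.strip()] = int(parts[0])
    return values


def memory_and_swap_usage(text: str) -> dict[str, float]:
    """Return used RAM and used swap as percentages of their totals."""
    info = parse_meminfo(text)
    mem_total = info["MemTotal"]
    # MemAvailable is the kernel's estimate of memory usable without swapping.
    mem_used = mem_total - info["MemAvailable"]
    swap_total = info.get("SwapTotal", 0)
    swap_used = swap_total - info.get("SwapFree", 0)
    return {
        "mem_used_pct": 100.0 * mem_used / mem_total,
        # A host may have no swap configured at all.
        "swap_used_pct": 100.0 * swap_used / swap_total if swap_total else 0.0,
    }


# Made-up sample values, for illustration only.
SAMPLE = """\
MemTotal:       16000000 kB
MemFree:         1000000 kB
MemAvailable:    4000000 kB
SwapTotal:       2000000 kB
SwapFree:        1500000 kB
"""

usage = memory_and_swap_usage(SAMPLE)
print(usage)  # {'mem_used_pct': 75.0, 'swap_used_pct': 25.0}
```

Reporting the two percentages side by side matters because high RAM usage with growing swap usage usually signals real memory pressure, while high RAM usage alone may not.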

Monitoring physical and virtual servers

Another key factor to consider when purchasing server monitoring software is the platform on which it is implemented: on-premises vs. cloud.

- On-premises monitoring tools are installed either on a separate server alongside the client or connected to the whole network. This older approach is no longer sufficient for many cloud-based clients. However, on-premises solutions offer high customizability and on-site control of data.

- In contrast to on-premises tools, SaaS server monitoring tools, which are configured and managed by the vendor, are becoming more common. They offer flexible contracts and are easy to install but lack deep customization. Even so, most companies opt for these tools because they run in cloud environments.

As described in my article on APM solutions, microservices, Docker containers, Kubernetes, and other cloud services are built very differently from traditional software and are more complicated. There is no obvious right or wrong choice here, and companies need to choose the tools that best fit their existing infrastructure.

To make this decision easier, G2 provides real reviews by server monitoring software users, along with a detailed buyer’s guide to help businesses navigate the purchasing process.

Best practices for using server monitoring software

Even though there are many types of servers and clients, there are some good practices companies can follow to ensure better server performance and functionality.

- Setting the right metrics for comparison: Any metric is useless without a baseline. Setting up the correct baseline will facilitate automatic alerts from the system when different types of issues occur. Basic baselines are easy to set since many vendors offer comparison metrics. However, some long-term baselines need correlations to account for regular system updates, seasonal traffic changes, and so on. For starters, users should plan the tracking metrics carefully and tweak them regularly until the monitoring system provides accurate alerts instead of false alarms.

- Prioritizing server optimization based on business value: For companies with a huge IT infrastructure, there usually aren’t enough IT administrators to optimize every single server. Therefore, such companies need to prioritize the servers that bring the most value by estimating how much revenue and how many customers they would lose if a given server failed for a certain period of time. This tells IT administrators which server to optimize first. Some vendors offer this type of feature for specific servers, but it is still up to the companies to analyze their whole infrastructure to determine the priority.

- Analyzing data trends for capacity planning: Beyond monitoring and fixing servers, IT managers can use trends in the data to decide when to purchase new servers and resources. For example, a web server needs more RAM and bandwidth as its unique traffic grows. Instead of waiting for the website to overload and lag, IT managers can project future traffic from historical trends and add capacity ahead of time.
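Two of these practices, baselining and capacity planning, can be sketched in a few lines of Python. This is only an illustration under simple assumptions: the baseline is a plain mean with a standard-deviation tolerance, and growth is modeled as a straight line fitted to equally spaced samples. The function names, the default tolerance, and the sample numbers are hypothetical. Real baselines would also need to account for the seasonality and system updates mentioned above.

```python
from statistics import mean, stdev


def exceeds_baseline(history, current, tolerance=3.0):
    """True if `current` is more than `tolerance` standard deviations
    above the mean of `history` (needs at least two past samples)."""
    baseline = mean(history)
    spread = stdev(history)
    return current > baseline + tolerance * spread


def periods_until_capacity(usage, capacity):
    """Fit a least-squares line to equally spaced usage samples and return
    how many periods after the last sample the line reaches `capacity`.
    Returns None when usage is flat or shrinking."""
    n = len(usage)
    xs = range(n)
    x_mean = mean(xs)
    y_mean = mean(usage)
    slope = (sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, usage))
             / sum((x - x_mean) ** 2 for x in xs))
    if slope <= 0:
        return None
    intercept = y_mean - slope * x_mean
    # Solve intercept + slope * x = capacity, measured from the last sample.
    return (capacity - intercept) / slope - (n - 1)


# Made-up CPU utilization history (percent), for illustration only.
cpu_history = [50, 52, 48, 51, 49]
print(exceeds_baseline(cpu_history, 70))  # True: far above the usual range
print(exceeds_baseline(cpu_history, 53))  # False: within normal variation

# Made-up monthly bandwidth usage against a fixed limit of 80 units.
monthly_usage = [40, 45, 50, 55]
print(periods_until_capacity(monthly_usage, 80))  # 5.0 months of headroom
```

Raising or lowering `tolerance` is one way to do the regular tweaking described above: a higher value reduces false alarms at the cost of slower detection.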

Server monitoring is part of the observability trend

Many IT performance issues come not only from the servers but also from the clients themselves, so companies also need monitoring tools specific to those clients. The end goal is to monitor every IT asset so the IT team can correctly fix every issue.

Servers are connected to everything, which makes server monitoring a great start to the observability journey toward monitoring all IT infrastructure, applications, and services.


Tian Lin

Tian is a research analyst at G2 for Cloud Infrastructure and IT Management software. He comes from a traditional market research background from other tech companies. Combining industry knowledge and G2 data, Tian guides customers through volatile technology markets based on their needs and goals.