Consumers like to conveniently access data with devices they love.

They appreciate self-service systems that apply proper governance and security over data while allowing them to access and modify it through a single entry point. They are often reluctant to reach out to the IT department that governs particular data types, as it can be time-consuming.

Modern businesses store various datasets like big data, social, web, or IoT device data. Data virtualization enables end-users to access and modify data stored across siloed, disparate systems through a single customer view. It helps customers bridge critical decision-making data together, fueling analytics and assisting businesses to make strategic and informed decisions.

What is data virtualization?

Data virtualization is an approach to data management that creates a logical extraction layer. It allows users to access and modify diverse data without worrying about technical details, like how the data is formatted at the source or where it’s stored.

Data virtualization enables users to access all data through a single view. Instead of moving huge blocks of information, data virtualization uses pointers to those blocks that require a smaller storage footprint and provide high-performance access to stored data.

Data virtualization doesn’t replicate data or store it anywhere. It helps a user to connect to required data and delivers it in real-time. It also allows businesses to apply a range of analytics like predictive, visual, and streaming on the most recent data updates. Not only does it help companies centralize security and governance over siloed data, it also allows them to deliver data in a way consumers can use it.

With the large amount of data that companies are collecting in different aspects and formats, it gets trickier to manage. Some companies have data warehouses to store the multitude of information they have acquired. But storing unstructured data coming from social, web, or IoT devices becomes a complicated task.

Data virtualization software provides a solution to access all of this data in a way your end users love. As consumer applications evolve, data virtualization enables businesses to follow an agile approach to data management.

Why do you need to virtualize data?

In this competitive business environment where data demands are rising at the same rate as the amount of data you store, it’s crucial to manage it properly and leverage it when needed. With organizations accumulating multiple types of data, the task of managing it has gone beyond the capabilities of traditional data integration like Extract Transform Load (ETL) systems or data warehouse software.

Your agility governs how well you can adapt to evolving market trends in a fast-paced business environment. Data virtualization enables businesses to quickly access and use production-quality data, helping them be agile with their development, testing, production, and release cycles.

Data visualization helps you grow beyond the legacy ticketing system and doesn’t require you to approach a database administrator for your needs. Traditionally, IT enterprises relied on the request-fulfill model, where developers and testers wait in a queue since preparing a test data copy was time-consuming.

This added redundancies in the application development lifecycle and slowed down the process. Since it took a lot of time to update or refresh test data, development or QA teams were bound to work with stale data, creating data-related errors in the production environment.

Data virtualization helps businesses to eliminate redundancies while delivering better business results. It helps your business be more cost-effective and time efficient by providing a single view of well-engineered data that you can access, modify, and manage.

Apart from the above factors, there are many capabilities of data virtualization that make it a must-have for business.

Data virtualization capabilities include:

- Profitability: Data virtualization provides seamless access to lengths and breadths of an organization’s data, enabling businesses to make informed and profitable decisions.

- Risk reduction: Data virtualization’s up-to-the-minute information helps businesses to mitigate the risks related to compliance penalties. It also saves development time with fast iteration, minimizing a project’s risk.

- Efficiency: Data virtualization enhances the utilization of server and storage resources. It doesn’t replicate data, saving more on governance and hardware.

- Time-to-solution acceleration: Data virtualization projects are completed faster and benefit the business more quickly. This is also a benefit of lower project costs.

- Productivity: Data virtualization is easy to use and enables data engineering teams to do more in less time.

- Scalability: Data virtualization provisions lightweight database copies in minutes through a user interface or API, enabling you to scale agile development.

- Data governance: Data virtualization implements access controls over what data should be accessible to whom, making it a beneficial security asset.

Möchten Sie mehr über Datenvirtualisierungssoftware erfahren? Erkunden Sie Datenvirtualisierung Produkte.

How does data virtualization work?

Data virtualization allows businesses to quickly access the data they need. First, you need to choose a data virtualization middleware for your enterprise that is easy to use and scalable across your on-premise, cloud, or hybrid infrastructure. Data virtualization software will enable your data engineering team to design clean and concise data views using rich analytics, design, and development features.

Next up, your data analytics users can find the business views they require through data catalogs or application programming interfaces (API) management systems. Whenever the users run a report or refresh a dashboard, data virtualization accesses information in real-time, makes transformations, and delivers it to the user.

Moreover, its security and governance functions help ensure that businesses meet their service, security, and privacy service level agreements (SLAs) and comply with industry regulations.

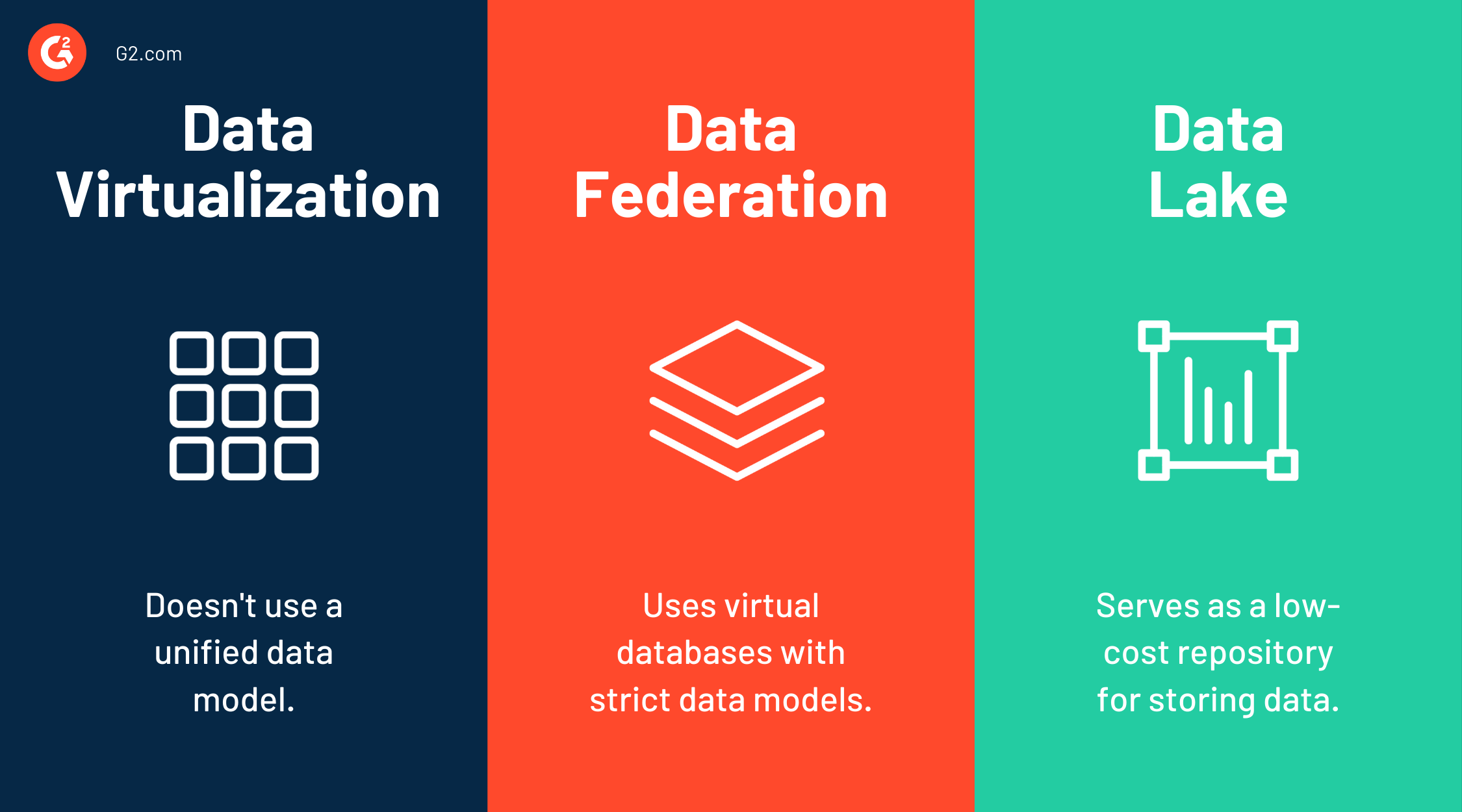

Data virtualization vs. data federation vs. data lake

Data virtualization and data federation are sometimes used interchangeably. Data federation is a type of data virtualization. Both of them integrate data and simplify access for front-end applications.

Data federation is an approach that uses virtual databases with strict data models. It enables users to access distributed data types and models through a single interface and allows multiple databases to function as one. The virtual database takes data from diverse sources and converts it into a common model.

Data lakes serve as low-cost repositories for storing huge amounts of structured or unstructured data. It’s the preferred choice of big development teams who work with open-source tools and need a cost-effective data analytics sandbox.

On the contrary, data virtualization provides an interface to access different data models without following any strict data model. It integrates all enterprise data siloed across disparate systems, implements centralized security and governance with unified data, and delivers it to users in real-time.

Data virtualization use cases

Data virtualization involves introducing a layer between disparate data sources and consumers. It has multiple use cases in the industry.

Data integration

Data integration is perhaps the most common use case of data virtualization. Many businesses work with diverse, disparate data sources like big data, cloud data, and social media.

Since these data types are in different formats, data virtualization makes it easier for consumers to connect with a particular type they need without worrying about its format or storage location.

DevOps

In application development processes, teams majorly automate everything except data to transform app-driven customer experiences. Data virtualization helps such teams to connect, access, and use production-quality data seamlessly.

It helps DevOps teams eliminate the bottlenecks in data provisioning and reduce the resources required to compute and create copies of data for developers and testers.

ERP upgrades

Most enterprise resource planning projects are stalled due to the slow and complex process of refreshing project environments. Data virtualization tools can help ERP teams operate more efficiently than legacy processes by cutting complexities, lowering total cost of ownership (TCO), and accelerating projects by providing virtual copies of data.

Analytics, reporting, and backup

For business intelligence projects that demand integration, data virtualization can provide on-demand data access. Virtual data copies can facilitate a sandbox for destructive query and report design.

When your teams encounter a production issue, they can identify the exact cause with the ability to provision virtual data environments. It also helps in validating that any modifications don't cause unforeseen problems.

Big data and predictive analytics

Big data and predictive analytics are built on data coming from heterogeneous sources. It’s not as simple as drawing data from a database. Big data comes from diverse sources such as social media, cell phones, email, and other origins.

Data virtualization makes it easier for a user to access diverse datasets from a single platform and use it to conduct analytics.

Top 5 data virtualization software

Data virtualization software enables organizations to adapt to agile data storage, retrieval, and integration processes by using virtual data layers.

To qualify for inclusion in the data virtualization software list, a product must:

- Use a virtualized layer to abstract data

- Enable data integration between data from disparate sources

- Allow data retrieval and manipulation

*Below are the five leading data virtualization software from G2's Summer 2021 Grid® Report. Some reviews may be edited for clarity.

1. SAP HANA

SAP HANA offers data virtualization solutions that help users to perform operations on data in real-time. It provides a single platform for all processes, ultimately reducing the hardware costs, manual efforts, and time.

What users like:

“I have been using SAP HANA in my office for the last two years. As an automation developer, I love its feature to record scripts in text format where it fetches the field ID, table ID, and window IDs and lets me use it in my VB Script, UiPath Automation, Macros.

Different environments like Q40, S40, D40, PRD help me handle both production and testing. Whenever we face any problem in production, my Q40 connection allows me to create a replica of this and handle it. Also, I love the T-code functionalities: FB70, FB60, BP, F-28, F-30, MIRO are my personal favorites.”

- SAP HANA Review, Debasis N.

What users dislike:

“There are many licensing options available for different use cases, but most small businesses still can't afford to use SAP HANA because it is very costly.

It does not work with any OS other than Linux Environment. Also, the documentation describing the functionality of SAP HANA should be improved and readily provided. It consumes a lot of RAM and CPU power, resulting in lags and crashes on the user's device.”

- SAP HANA Review, Dr. Ravindra P.

2. PowerCenter

Informatica PowerCenter provides an end-to-end data integration platform that includes capabilities to integrate raw, fragmented data from disparate sources. It helps businesses transform raw data into complete, high-quality, business-ready information.

What users like:

“Informatica PowerCenter is an innovative software that works with ETL-type data integration. This powerful tool facilitates data migration and the integration of different databases such as SQL Server and Oracle. All of this under one intuitive and simple interface. Its support system is very efficient and solves errors that occur in a matter of minutes.”

- PowerCenter Review, Leah S.

What users dislike:

“User interface can be improved. They can work on the visualization part to make it more user-friendly. One more aspect I want to include is that when I work on it, sometimes data gets lost for some time due to synchronization issues that can be resolved.”

- PowerCenter Review, Soumyadip R.

3. Denodo

Denodo offers enterprise-grade data virtualization with an easy-to-use interface that helps businesses conduct complex business operations, including supplier management, regulatory compliance, data-as-a-service, systems modernization, and more.

What users like:

“Denodo is easy to use and allows me to develop a REST web service in less than 30 mins without much code, and I often receive good feedback from customers. They can proceed with their testing and roll it out to production in the same day or within a couple of days!”

- Denodo Review, Chevon T.

What users dislike:

“When handling huge data, we have seen some performance issues, but that’s not a major constraint as we don’t process 5-10 years of historical load daily.”

- Denodo Review, Bibhu D.

4. AWS Glue

AWS Glue is a serverless data integration service that makes it easy to discover, prepare, and combine data for analytics, machine learning, and application development. AWS Glue provides all of the capabilities needed for data integration so you can start analyzing your data and putting it to use in minutes instead of months.

What users like:

“The most useful thing about AWS Glue is to convert the data into parquet format from the raw data format, which is not present with other ETL tools. It can convert a huge amount of data into parquet format and retrieve it as required.”

- AWS Glue Review, Anudeep M.

What users dislike:

“It can be expensive depending on the usage and what you plan on doing with it.”

- AWS Glue Review, Danny S.

5. Oracle Virtualization

Oracle VM is designed for efficiency and optimized for performance to support a variety of Linux, Windows, and Oracle Solaris workloads. The virtualization software is supported by a long list of partners in every industry.

What users like:

“Oracle Virtualization has been my favorite tool for managing, editing, and creating virtual machines for a long time. The interface is intuitive, and it is possible to configure the resources available for each virtual machine in detail. I never had any severe problems running the virtual machines. It’s an excellent tool to test features and configurations before applying them to physical devices.

The software has exciting features, such as the possibility of virtual machines recognizing real connected peripherals, in addition to graphical configurations. It’s a fundamental tool for any IT professional.”

- Oracle Virtualization Review, Rafael C.

What users dislike:

“The export feature is not good and was pretty confusing at first. I was confused about the supported file type.

The documentation is quite lengthy on the website. I referred to many youtube videos about using its features.”

- Oracle Virtualization Review, Niyati M.

Make a wise choice

Data virtualization is a fantastic solution when it comes to working with data stored in disparate systems. It makes a good business case when you need business-friendly and well-engineered data views for your users. As clients’ requirements are evolving, IT can quickly deliver and iterate a new data set through data virtualization.

When you require up-to-the-minute information or need to federate data from multiple sources, data virtualization can help you connect with it quickly and serve it fresh every time.

But data virtualization isn’t an answer to every data analytics requirement. Depending on the use case, sometimes a consolidated data warehouse with an ETL is a better solution - or even a hybrid of both.

If data warehouses better serve your purpose, discover the best data warehouse software to process, transform, and ingest data to fuel your decision making.

Sagar Joshi

Sagar Joshi is a former content marketing specialist at G2 in India. He is an engineer with a keen interest in data analytics and cybersecurity. He writes about topics related to them. You can find him reading books, learning a new language, or playing pool in his free time.