Building a voice-enabled system involves many stages of testing.

Businesses around the world are working on powering their systems with conversational abilities to create a friendly user experience. But programming these abilities can get tricky, which is why some systems end up unresponsive, hard to understand, and slow.

If your product serves a specific region, it needs to be trained on a dedicated set of regional dialects. It needs to comprehend the complexity of human diction, derive specific conversation patterns, and act fast. Users expect voice assistants to respond to their queries and understand the context behind them. Switching to NLP-based voice recognition software or data labeling software can help you categorize audio data efficiently and build responsive voice recognition assistants.

Let's look at how voice recognition is shaping the tech industry today, along with its adoption, architecture, and major applications.

What is voice recognition?

Voice recognition, also known as speech recognition, focuses on converting spoken instructions and continuous sentences into actions. These tools offer either a console or web-based app interface where users can log on, dictate commands, and perform specific actions. Some voice recognition systems are also used for robotic assistance within airports, banks, and hospitals.

Some famous examples of voice recognition assistants are Apple’s Siri, Microsoft’s Cortana, Google Home, and Amazon’s Echo and Alexa.

While modern-day computers are more proficient in recognizing speech, the technology has roots in the early 1950s. Let’s look at the journey of how computers became our personal walkie-talkies.

History of voice recognition

The first-ever voice recognition system was designed by Bell Laboratories in 1952. Known as the Audrey system, this device could recognize the digits zero through nine when spoken by a single person.

Ten years later, IBM came out with Shoebox, an experimental device that could perform mathematical functions and process up to 16 words in English. By the end of the 1960s, most companies added hardware components like internal transistors and microphones to computers.

In the 1970s and 1980s, tech companies went further into studying speech and sound data. They expanded their digital databases with newer words. The US Department of Defense’s Defense Advanced Research Projects Agency (DARPA) also launched the Speech Understanding Research (SUR) program. This program gave birth to the Harpy speech system, which was capable of understanding about 1,000 words.

In the 1990s and 2000s, speech recognition propelled forward as the use of personal computers (PCs) grew. Several applications like Dragon Dictate, PlainTalk, and ViaVoice by IBM were launched. These applications were able to process nearly 80% of human speech and helped users with data processing and application navigation on desktops.

By 2009, Google launched Google Voice for iOS devices. In 2011, Apple introduced Siri. As the user base of the voice market grew, Google began including voice search in its search engine and in web browsers like Google Chrome. Now, Google Voice operates for iOS 13 and above.

More people are now comfortable interacting vocally with machines. While some use it to transcribe documents, others set their home automation systems on it.

Home devices can be controlled solely through speech control. You can lock your car doors from a distance or switch off your electronics with a simple command. If you have a baby sleeping in the next room, you can instruct Alexa to keep an eye on her moves while you are away.

But how did this technology get to where it is today? There’s a simple working mechanism to it.

How does voice recognition work?

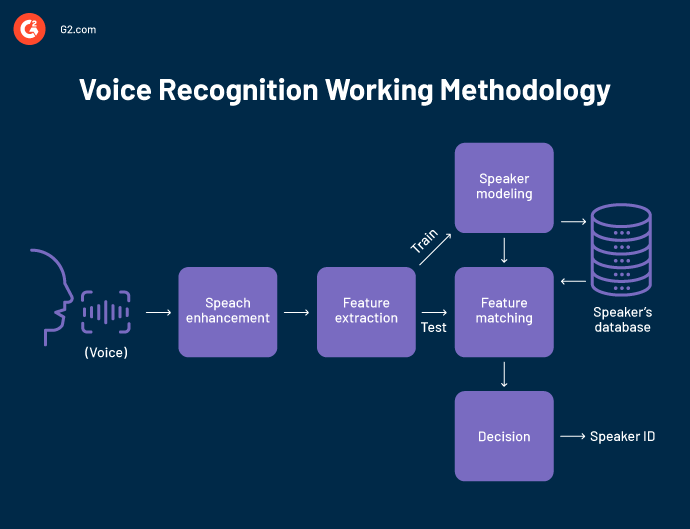

The voice recognition system detects voice and converts analog signals (the words we speak) into digital signals (that computers interpret).

This is done with the help of an analog-to-digital (A/D) converter. As you speak, the audio waves are enhanced and converted into digital signals. The features of the words are then extracted. Before displaying the output, the system compares these features against the entries stored in a digital database.
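To make the analog-to-digital step concrete, here is a minimal Python sketch of sampling and quantization. The 8 kHz sample rate, the 16-bit depth, and the 440 Hz test tone are assumptions chosen for illustration, not values taken from any particular voice recognition product.

```python
import math

SAMPLE_RATE_HZ = 8000   # assumed sampling rate (samples per second)
BIT_DEPTH = 16          # assumed resolution of each digital sample


def analog_signal(t: float) -> float:
    """Stand-in for a microphone's analog voltage: a 440 Hz tone in [-1, 1]."""
    return math.sin(2 * math.pi * 440 * t)


def digitize(signal, duration_s: float) -> list[int]:
    """Sample the signal at fixed intervals and quantize each sample to an integer."""
    max_level = 2 ** (BIT_DEPTH - 1) - 1            # 32767 for 16-bit audio
    n_samples = round(duration_s * SAMPLE_RATE_HZ)
    samples = []
    for n in range(n_samples):
        t = n / SAMPLE_RATE_HZ                      # time of the n-th sample
        samples.append(round(signal(t) * max_level))
    return samples


digital = digitize(analog_signal, duration_s=0.01)
print(len(digital), "samples; first five:", digital[:5])
```

A higher sample rate captures more detail per second, and a higher bit depth captures finer differences in loudness; both increase the amount of data the later stages have to process.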

The database consists of vocabulary, phonetics, and syllables. It’s loaded into your computer’s random access memory (RAM) and searched whenever input is registered. Once the program finds a match, it outputs the corresponding text. So whenever you speak into an external or internal microphone, your words appear as text on the screen.

You need large RAM and a large dataset to ensure the process remains smooth. The capacity of your RAM is directly related to the effectiveness of a voice recognition program. If the entire database can be loaded into RAM in one go, the output will be processed faster.

Besides saving time and resources, voice recognition also gives us more options for expressing ourselves, as some of us are a lot better at verbal speech than writing.

When a system is trained on your voice, the process looks like this:

- You repeat words so the voice recognition software can extract their features.

- The repeated words are stored as speech samples, and statistical averages of multiple samples are computed.

- The averaged samples are used to train the voice recognition system.

- The system’s response is personalized to the speaker’s input.
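The training steps above can be sketched as follows. This is a simplified illustration, not how any specific product works: the feature vectors are made-up three-number lists, and `train_templates` and `recognize` are hypothetical helper names. Real systems extract much richer features and use statistical or neural models rather than a plain average.

```python
import math
from statistics import fmean


def train_templates(samples: dict[str, list[list[float]]]) -> dict[str, list[float]]:
    """Average the repeated feature vectors recorded for each word."""
    templates = {}
    for word, vectors in samples.items():
        # zip(*vectors) groups the i-th feature of every repetition together
        templates[word] = [fmean(column) for column in zip(*vectors)]
    return templates


def recognize(features: list[float], templates: dict[str, list[float]]) -> str:
    """Return the word whose averaged template is closest to the input."""
    return min(templates, key=lambda word: math.dist(features, templates[word]))


# Hypothetical features from one speaker repeating each word three times
speech_samples = {
    "yes": [[0.9, 0.1, 0.3], [1.0, 0.2, 0.2], [0.8, 0.0, 0.4]],
    "no":  [[0.2, 0.8, 0.7], [0.1, 0.9, 0.6], [0.3, 0.7, 0.8]],
}

templates = train_templates(speech_samples)
print(recognize([0.85, 0.15, 0.3], templates))
```

Averaging several repetitions smooths out the small differences between one utterance and the next, which is why the speaker is asked to repeat each word.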

Types of voice recognition

We use voice recognition in smart speakers, mobile devices, desktops, and laptops. On all these devices, you can set a talk-back feature that reads your screen and vocalizes your words. This cuts your screen time and gives you master control of your device. What are other kinds of voice recognition systems being used nowadays?

- A speaker-dependent system has to be trained on several words and phrases before use.

- A speaker-independent system, also known as voice recognition software, recognizes a person’s voice without training.

- Discrete speech recognition requires the user to pause between words so the computer can interpret the voice.

- Continuous speech recognition understands the normal flow of speech, just like the voice typing feature of Google Docs.

- Natural text-to-speech doesn’t just understand a human voice but can also respond to the queries being asked. Natural language processing (NLP) or conversational artificial intelligence (AI) is used to create these systems.
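To illustrate why pauses help in discrete speech recognition, here is a minimal sketch that splits a stream of loudness values into word segments wherever the signal stays quiet. The energy values, the `threshold`, and `min_pause` are assumptions for illustration, and `split_on_pauses` is a hypothetical helper, not part of any recognition library.

```python
def split_on_pauses(energies: list[float], threshold: float = 0.1,
                    min_pause: int = 2) -> list[tuple[int, int]]:
    """Return (start, end) frame indices of segments separated by pauses.

    A pause is at least `min_pause` consecutive frames below `threshold`.
    The end index is exclusive.
    """
    segments = []
    start = None      # index where the current word began, if any
    quiet = 0         # number of consecutive quiet frames seen so far
    for i, energy in enumerate(energies):
        if energy >= threshold:
            if start is None:
                start = i
            quiet = 0
        elif start is not None:
            quiet += 1
            if quiet >= min_pause:
                # the word ended where the quiet run began
                segments.append((start, i - quiet + 1))
                start, quiet = None, 0
    if start is not None:
        # the signal ended mid-word or during a short pause
        segments.append((start, len(energies) - quiet))
    return segments


# Hypothetical per-frame loudness: two words separated by a three-frame pause
frames = [0.0, 0.5, 0.7, 0.6, 0.0, 0.0, 0.0, 0.8, 0.9, 0.0]
print(split_on_pauses(frames))  # [(1, 4), (7, 9)]
```

Continuous speech recognition cannot rely on silences like this, because people rarely pause between words when speaking naturally, so it has to find word boundaries from the sounds themselves.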

A customized voice recognition system on your computer can allow you to manage tasks like

- Formatting and saving text

- Browsing the internet

- Downloading images

- Printing and sending documents

- Writing proposals and project briefs

- Completing online application forms

- Responding to voice commands

- Answering queries through online searches

- Making phone or Zoom calls

- Adding or deleting a contact

- Setting appointment reminders and notifications

Major features of voice recognition

Many voice recognition programs run on neural networks, which makes them time- and cost-efficient. Neural networks are trained on large datasets and can process voice quickly.

The neural networks are equipped with the following features:

- Language weighting. You can improve your precision by weighting some frequently used words. The system can pre-populate these words as it gets to know your speech pattern.

- Speaker labeling. Based on your phonetics and tone of voice, the system can categorize which speaker is interacting with it.

- Acoustics training. The system can be trained to understand and adapt to the background noise of a business environment.

- Profanity filtering. Filter out expletives or unwanted phrases to sanitize output.
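Two of these features, language weighting and profanity filtering, can be sketched in a few lines. This is a toy illustration under stated assumptions: the candidate transcripts, their scores, the boost values, and the blocked-word list are invented, and `rescore` and `filter_profanity` are hypothetical helpers rather than functions from a real speech API.

```python
import re


def rescore(candidates: dict[str, float], boosts: dict[str, float]) -> str:
    """Pick the best transcript after adding a bonus for each boosted word."""
    def boosted_score(item: tuple[str, float]) -> float:
        text, score = item
        bonus = sum(boosts.get(word, 0.0) for word in text.lower().split())
        return score + bonus
    best_text, _ = max(candidates.items(), key=boosted_score)
    return best_text


def filter_profanity(text: str, blocked: set[str]) -> str:
    """Replace each blocked word with asterisks of the same length."""
    def mask(match: re.Match) -> str:
        word = match.group(0)
        return "*" * len(word) if word.lower() in blocked else word
    return re.sub(r"[A-Za-z']+", mask, text)


# Hypothetical recognizer output: transcript -> confidence score
candidates = {"book a flight to boston": 0.60, "book a fight to boston": 0.62}
boosts = {"flight": 0.10}   # assumed weight for a frequently used word

print(rescore(candidates, boosts))
print(filter_profanity("That darn printer jammed again", {"darn"}))
```

Without the boost, the slightly higher-scoring "fight" transcript would win; weighting a word the speaker uses often tips the decision toward the intended phrase.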

Did you know? The global speech and voice recognition market size is projected to grow from USD 9.4 billion in 2022 to USD 28.1 billion by 2027, at a CAGR of 24.4%.

Source: Markets and Markets
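As a quick check of the arithmetic behind that projection, the compound annual growth rate (CAGR) can be recomputed from the two endpoints. The sketch assumes five compounding years (2022 to 2027); the result lands close to the quoted 24.4%, and the small gap may simply come from rounding in the published figures.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


rate = cagr(start_value=9.4, end_value=28.1, years=5)
print(f"{rate:.1%}")
```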

Applications of voice recognition across industries

Voice recognition has made a small space for itself inside every home. From playing your favorite music to browsing the internet to drawing the curtains, digital assistants have become our friends.

Outside of personal interests, we use voice-based tools for many professional reasons. The ever-evolving nature of voice technology is reflected in the following industries.

- Healthcare: Using voice recognition, healthcare providers, such as nurses or doctors, can dictate notes to their computers without ignoring patient care.

- Banking, financial services, and insurance: Banks and insurance companies often struggle with their customer service. A junior employee and a branch manager repeat the same instructions to the customer. To solve this, frequent queries on opening a bank account or applying for a credit card are automated in real-time with voice control.

- Recruitment chatbots: Employees are becoming more and more comfortable conversing with chatbots. Whether they are appearing for a performance evaluation, a promotion, a job posting, or even an interview, a chatbot can engage with them and make the job of your HR teams easier.

- Advertising: Many brands use users’ speech data to create a go-to-market strategy. For example, if you voice search the web for “online dance classes,” some relevant dance agencies may make it to your inbox. Not only does your browser save the query, but it also stores the search pattern, accent, and location. Companies can access this data to pitch their services.

- E-commerce: We no longer need to scoot over to adjust the lights in the middle of our favorite movie. Digital assistants like Alexa or Google Home do all the lifting for us. We can also buy music, shop online, play games, and listen to audiobooks.

- Aviation: Before an aircraft takes off, the pilots must go through a long checklist of engine requirements. Sometimes, they miss out on important steps on the list. With speech-to-text in the cockpit, pilots can listen to the checklist and ensure everything is in place before take-off.

- Corporate: In the corporate industry, voice recognition promotes employee diversity, empathy, and inclusion. It provides a comfortable, ergonomic alternative to the traditional forms of working. Emails and documents can be transcribed without typing on a keyboard. Employees can set voice typing on their documents and express their ideas without dealing with inner critics.

You can also create the minutes of any meeting or pre-recorded meeting clips in seconds. Any absent workers can catch up with the trail of old communication. Overall, it creates a more forgiving and empathetic workspace.

Did you know? The Royal Bank of Canada lets users pay bills through voice commands on bank applications. Also, the United Services Automobile Association (USAA), which is a financial services group, offers access to members’ account information through digital assistants like Alexa.

Source: Summa Linguae

Voice recognition process on desktop

After understanding the essence of voice recognition, let’s learn about various hardware and software requirements to run this program on your desktop.

Before you activate the voice feature, plug in your external microphone and headset through a USB socket. Turn your internal microphone on if you’re not using an external headset. Now you’re ready to look at different ways of activating voice recognition technology on different types of operating systems.

Microsoft Windows 11 (Windows Speech Recognition)

The steps for setting up a microphone for Windows 11 and earlier versions of Microsoft Windows are somewhat similar.

- Select Start > Settings > Time and Language > Speech.

- Under Microphone, click Get started.

- When the speech wizard window opens, voice typing starts automatically. If the speech wizard detects microphone issues while running, you see a prompt on the screen. You can select options from it to solve the issue.

- Press the Windows logo key + Ctrl + S. The speech recognition setup will open.

- Read through the instructions and select Next. Finish the setup. If you have already set this up, this keyboard shortcut will re-open the speech wizard.

- Go to the Control Panel. Select Ease of Access > Speech Recognition > Train your computer to understand you better.

Microsoft Office 365

You can use the Dictate command in Microsoft Word and PowerPoint to narrate your content. This command lets you convert your speech into text with a mic and a reliable internet connection. You can put your thoughts directly on the page and create articles or quick notes.

However, you have to speak out the punctuation marks. The system can’t decipher them.

- Sign in to your Microsoft account with a mic-enabled device.

- Open a new or existing document and select Home > Dictate.

- Wait for the dictate button to turn on, indicating the program is ready to listen to you.

- Start speaking, and you’ll see your words appear on the screen.
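Because punctuation has to be spoken, a dictation tool needs some step that turns words like "comma" into symbols. The sketch below shows one simple way to do that; the phrase list and the `apply_spoken_punctuation` helper are assumptions for illustration and do not reflect how Microsoft implements Dictate.

```python
import re

# Assumed mapping from spoken phrases to punctuation marks
SPOKEN_PUNCTUATION = {
    "question mark": "?",
    "exclamation mark": "!",
    "comma": ",",
    "period": ".",
}


def apply_spoken_punctuation(transcript: str) -> str:
    """Replace spoken punctuation phrases and attach them to the previous word."""
    text = transcript
    for phrase, mark in SPOKEN_PUNCTUATION.items():
        # \s* swallows the space before the phrase so "hello comma" -> "hello,"
        pattern = r"\s*\b" + re.escape(phrase) + r"\b"
        text = re.sub(pattern, mark, text, flags=re.IGNORECASE)
    return text


print(apply_spoken_punctuation("hello comma how are you question mark"))
```

A plain substitution like this cannot tell a spoken command from an ordinary word, so a sentence such as "the trial period ended" would be altered too. Handling that ambiguity is one reason real dictation tools rely on context.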

macOS Dictation

In macOS Ventura, you can dictate text in several ways. For online internet browsing, you can use Siri. If you want to dictate text and control your Mac using your voice, go through this process:

- On your Mac, choose Apple menu > System Settings > Keyboard

- In the Keyboard Window, choose the last option: Dictation

- Click On. A prompt will appear, telling you to Enable dictation. Select this option.

- Click the Language pop-up menu to dictate using another language, then choose a language or dialect. You can either choose a custom language and add a language or select from the existing list.

- To remove the language, click the language pop-up menu. Choose to customize and then deselect the language.

95.95% is the accuracy rate of Google Speech Cloud Application Programming Interface (API).

Source: SerpApi
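Accuracy figures like this are commonly derived from the word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the transcript into the reference text, divided by the reference length. The source does not say how the 95.95% figure was measured, so the sketch below only illustrates the general metric; `word_error_rate` is a hypothetical helper.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # previous[j] = edits needed to turn the first i-1 reference words
    # into the first j hypothesis words
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


wer = word_error_rate("turn off the kitchen lights", "turn of the kitchen light")
print(f"WER: {wer:.0%}, accuracy: {1 - wer:.0%}")
```

In this made-up example, two of the five reference words are wrong, so the word error rate is 40% and the word accuracy is 60%.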

Google's voice access

Google has been in the voice recognition space for over a decade. With products like Google Keep, Google Voice Search, and Google Home, Google has been able to store 230 billion words. The machine learning speech model Google uses to recognize and convert human speech works at a mind-boggling speed.

Voice recognition in mobile:

iPhones and iPads. Siri is a virtual assistant that attends to your needs on iPhones or iPads. Whether you want to call someone, set alarms, or lock your phone, Siri’s there for you.

Top voice recognition software in 2024

Voice recognition software converts spoken words into computerized text using speech-to-text. It can be used in a car system, at commercial businesses, or by people with disabilities. Companies use this software for interactive voice response (IVR) to automate consumer queries. It’s also used to cross-check business IDs.

To be included in this category, the software must:

- Include machine learning algorithms that interpret various languages.

- Have a digital database of vocabulary.

- Edit and convert audio and video files.

- Train language models on user input.

- Capture content through handheld mics, external microphones, and mobile phones.

*Below are the five leading voice recognition software tools from G2's Winter 2023 Grid® Report. Some reviews may have been edited for clarity.

1. Google Cloud Speech-to-Text

Google Cloud Speech-to-Text is a cloud-based speech recognition API platform that enables you to transcribe over 73 languages into a human-readable format and generate automated responses that are accurate, quick, and contextual. This tool has been consistently ranking as a leader in the voice recognition category and is being used for device-based speech recognition.

What do users like best?

Google Cloud Speech-to-Text is extremely easy to use. It can easily be integrated to work with any meeting or speech session. The speed with which it generates text is almost real time. Due to it's speed, content creation becomes superfast saving a lot of time of the user. An important feature that I observed in Google Speech-to-Text is that it automatically punctuates sentences based on the understanding of NLP.

- Google Cloud Speech-to-Text Review, Varad V.

What do users dislike?

Along with some good features it has some drawbacks as well like it requires internet connection meaning it does not work offline. Also, we are not sure about privancy that how google server is handling user's data and how they are using it to improve it's features. Sometimes I feel latency in when real time transcription requires which needs to be improved.

- Google Cloud Speech-to-Text Review, Varad V.

2. Deepgram

Deepgram is the first ever AI-based transcription software for human-computer interaction. Whether the source is high-fidelity, single-speaker dictation, or cluttered, crowded lectures, Deepgram delivers accurate results.

What do users like best?

“The most impressive thing about their transcription service is the speed. We've tried many transcription services, and Deepgram blew us away with speed and accuracy. With their highly competitive prices compared to the big guys, it's a no-brainer.”

- Deepgram Review, Andrei T.

What do users dislike?

“Service can be unreliable when you need it the most. There are times when transcription response times are over 5 minutes.”

- Deepgram Review, Dhonn L.

3. Whisper

Whisper is a general speech to text tool that is trained on strong NLP algorithms to break down voice instructions and convert them into tangible actions. Whisper works with diverse forms of audio, studio data, spatial data and sonics to understand multilingual human commands and break down the sentiments behind those commands.

What do users like best?

"Whisper impresses with its seamless user interface, ensuring effortless communication. Implementing it is straightforward, although a bit of initial guidance would enhance the onboarding experience. Customer support is reliable but occasionally faces delays. Its frequent use highlights its practicality, while a rich set of features caters to diverse communication needs. Integration into existing workflows is smooth, contributing to its overall appeal."

- Whisper Review, Shashi P.

What do users dislike?

"The main dislike point is, if we have long form transcription then model failed to transcribe completely in once , because it's design in such way that, it's takes only 30s audio file."

- Whisper Review, Dhonn L.
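Whisper processes audio in 30-second windows, which is the limit this reviewer describes. One common workaround for fixed-window models is to split a long recording into chunks of that length before transcription. The sketch below only shows the splitting step; the 16 kHz sample rate is an assumption, and `chunk_audio` is a hypothetical helper, not part of the Whisper package.

```python
from typing import Iterator, Sequence

SAMPLE_RATE_HZ = 16_000   # assumed sample rate of the recording
WINDOW_SECONDS = 30       # window length mentioned in the review


def chunk_audio(samples: Sequence[float],
                sample_rate: int = SAMPLE_RATE_HZ,
                window_s: int = WINDOW_SECONDS) -> Iterator[Sequence[float]]:
    """Yield consecutive slices of at most `window_s` seconds each."""
    window = sample_rate * window_s
    for start in range(0, len(samples), window):
        yield samples[start:start + window]


# 70 seconds of silence stands in for a real recording
recording = [0.0] * (70 * SAMPLE_RATE_HZ)
chunks = list(chunk_audio(recording))
print([len(chunk) / SAMPLE_RATE_HZ for chunk in chunks])  # [30.0, 30.0, 10.0]
```

Cutting at fixed boundaries can split a word in half, so in practice chunks are often overlapped or cut at pauses instead.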

4. Krisp

Krisp gives you the power to communicate clearly and confidently with your employees, peers, clients, or consumers. It is an AI-based speech automation solution that enhances your interpretation skills and helps you create documents.

What do users like best?

“I cannot believe the amazing capability of Krisp to differentiate between my voice and completely cancel out the background noises. Now that so many people are working from home, we've gotten used to people apologizing for dogs or kids or other noises. But with Krisp, I have had my dogs barking right next to me, and the other people on my video calls can't hear the dogs at all – but they can still hear me perfectly!”

- Krisp Review, Crystal D.

What do users dislike?

“The 90 minutes a day for the free tier gets you pretty far, but it automatically counts down if you have it on and aren't even talking. I wish it would only count minutes where you're actually talking or not on mute.”

- Krisp Review, Tai H.

5. Otter.ai

Otter.ai derives meaning from every conversation you have. It’s a leading speech analytics and collaboration tool that connects team members based on what they say. It also integrates with leading video conference tools like Zoom, Microsoft Teams, and Google Meet.

What do users like best?

“I have to interview people and write articles for work. I love using Otter to record and transcribe my interviews. This saves me hours of tedious work and lets me do more of the enjoyable and creative aspects of my job.”

- Otter.ai Review, Gray G.

What do users dislike?

"The ability to label different speakers is useful, but this is one spot where AI isn't as good. I often get back-and-forth between two or more speakers lumped as one.”

- Otter.ai Review, Patrick H.

Alternative solutions for voice recognition software

Based on the flagship voice-based assistant you aim to develop, the backend software requirements can change. Here are some alternatives to consider if you are working with different kinds of audio transcription.

1. AI Chatbot Software: AI chatbots are trained on deep learning algorithms to engage in dialogue-based interactions with human users. Self-evolving natural language processing (NLP) and natural language understanding (NLU) enable computing systems to contextualize queries, relate to user sentiments, and respond with the right resolution. AI chatbot software is an advancement in the world of voice and text automation and has made query resolution simpler and more effective.

2. Conversational intelligence software: Conversational tools are used to analyze, transcribe, and document sales calls. This software uses machine learning to extract meaningful data, identify major sentiments and buyer pain points, and generate summaries for sales executives and business development reps. Conversational intelligence software gives you the right talking points to connect better with your prospects and close deals faster.

3. Intelligent virtual assistants software: These tools act as digital employees or live support agents that are built on expert systems to provide quick resolutions to customers and prospects. Unlike chatbot tools, this software uses convivial techniques to build strong rapport with customers and drive them towards brand trust and loyalty. They solve users' challenges, read support emails and escalations, route calls to the right department, and build on their vocabulary to be more succinct in future conversations.

Clear your throat and speak your mind

Whether you're overcoming writer's block, getting out of a sticky situation, or juggling multiple tasks, voice recognition has your back. With consistent experimentation in AI, voice recognition technology will soon eliminate all barriers to human-computer interaction.

Learn how voice assistants are raging in the tech marketplace and are one of the most popular industrial breakthroughs for software vendors and buyers.

Shreya Mattoo

Shreya Mattoo is a former Content Marketing Specialist at G2. She completed her Bachelor's in Computer Applications and is now pursuing Master's in Strategy and Leadership from Deakin University. She also holds an Advance Diploma in Business Analytics from NSDC. Her expertise lies in developing content around Augmented Reality, Virtual Reality, Artificial intelligence, Machine Learning, Peer Review Code, and Development Software. She wants to spread awareness for self-assist technologies in the tech community. When not working, she is either jamming out to rock music, reading crime fiction, or channeling her inner chef in the kitchen.