Shipping faster, building momentum, and growing market share are the dreams of any enterprise leader.

But infrastructure constraints like legacy server management are among the many things that stop them from fulfilling those dreams. As a developer, you want to build and ship products faster, but you end up worrying about server provisioning, scaling, and maintenance. Going serverless not only eases these problems but can also help you go to market faster and more cost-effectively.

Serverless architecture removes server management tasks so developers like you can focus solely on business logic and code. As a result, you scale applications faster, improve capacity planning, and adapt to changing business needs. Keep reading to learn the basics of serverless architecture, its importance, use cases, and how server virtualization software supports serverless functions.

What is serverless architecture?

Serverless architecture is a cloud computing execution approach for building applications without having to manage server infrastructure. It’s suitable for developing event-driven applications, application programming interfaces (APIs), loosely coupled architectures, and microservices.

In serverless architecture, cloud service providers handle the scaling, maintenance, and provisioning of database, server, and storage systems. They also improve operational efficiency by taking care of resource allocation, load balancing, and code deployment.

Moreover, software development kits (SDKs), command-line interfaces (CLIs), and integrated development environments (IDEs) from service providers make it easy to code, test, and deploy serverless applications.

Why use serverless architecture

Developers and enterprises looking for solutions to bottlenecks in workflows or ineffective software maintenance may find the answers – and more – by adopting serverless architecture. Businesses can potentially:

- Fast-track development cycles by focusing on the code instead of the underlying logistics.

- Experiment and innovate faster since developers don’t have to worry about networking, server provisioning, or idle resource cost.

- Distribute workload more efficiently with the help of automatic scaling that minimizes resource waste and enhances the end user's experience.

- Reduce operational costs as cloud service providers charge you based on actual resource consumption.

- Enjoy hassle-free maintenance with upgrade and patch management support from cloud providers.

- Stay agile with event triggers allowing apps to offer real-time reaction to user inputs.

How serverless architecture works

Serverless architecture works by abstracting server management and letting developers write code as functions that run in response to events like hypertext transfer protocol (HTTP) requests or data changes. Cloud service providers execute these functions in isolated environments, meaning developers don’t have to worry about server scaling or provisioning.

For example, an e-commerce company using serverless can easily handle the checkout process when customers place orders on its website. In this case, the order event triggers a serverless function that estimates shipping costs, processes payment via a payment gateway solution, and updates the inventory. A cloud provider automatically performs the entire function in an isolated environment.

Did you know? Function as a service (FaaS) is a popular serverless model that allows developers to write application code as a set of discrete functions that perform specific tasks when triggered by an event. AWS Lambda, launched by Amazon Web Services (AWS) in 2014, is widely regarded as the first major FaaS platform.

The development team can spend more time refining the serverless function logic, writing the payment processing code, integrating inventory management databases, deploying functions, and troubleshooting issues.
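The checkout example above can be sketched as a single function. This is a minimal illustration, not any provider's API: the `(event, context)` signature only loosely follows the handler shape common on FaaS platforms, and the inventory dictionary, shipping rates, and `charge_payment` stub are hypothetical stand-ins for a real database and payment gateway.

```python
from dataclasses import dataclass

# Hypothetical in-memory stand-in for an inventory database.
INVENTORY = {"sku-123": 5}


@dataclass
class PaymentResult:
    approved: bool
    charged_cents: int


def estimate_shipping_cents(items):
    """Flat base fee plus a per-unit fee (made-up rates)."""
    units = sum(item["quantity"] for item in items)
    return 500 + 100 * units


def charge_payment(total_cents):
    """Stand-in for a payment gateway call; this stub always approves."""
    return PaymentResult(approved=True, charged_cents=total_cents)


def update_inventory(items):
    for item in items:
        INVENTORY[item["sku"]] -= item["quantity"]


def handle_order(event, context=None):
    """Entry point the platform would invoke once per order event."""
    items = event["items"]
    # Reject the order early if any item is out of stock.
    for item in items:
        if INVENTORY.get(item["sku"], 0) < item["quantity"]:
            return {"status": "rejected", "reason": "out_of_stock"}
    subtotal = sum(i["unit_price_cents"] * i["quantity"] for i in items)
    shipping = estimate_shipping_cents(items)
    payment = charge_payment(subtotal + shipping)
    if not payment.approved:
        return {"status": "rejected", "reason": "payment_declined"}
    update_inventory(items)
    return {
        "status": "confirmed",
        "shipping_cents": shipping,
        "charged_cents": payment.charged_cents,
    }
```

Everything in the function is business logic; nothing in it provisions or scales a server, which is the part the provider would take on.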

How do serverless and FaaS differ from PaaS?

Serverless and FaaS remove server management tasks and let you focus on event-triggered functions. Platform as a service (PaaS) is a cloud-based service model that offers developer tools and deployment environments so you can have more control over app components. FaaS is suitable for building event-driven applications, whereas PaaS is ideal for broader application needs.

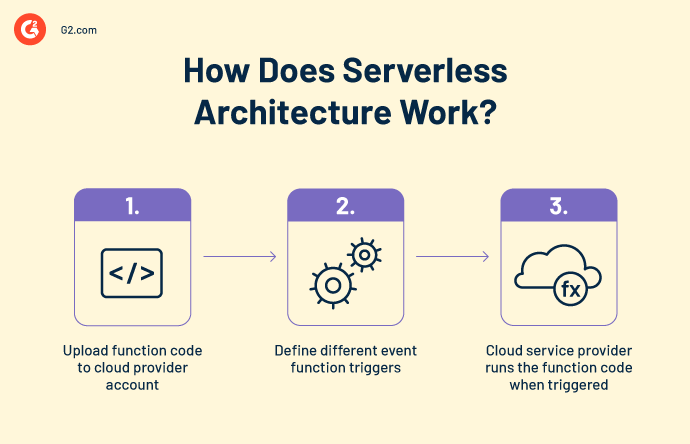

How a serverless architecture works, step by step

Typically, a serverless architecture goes through these stages.

- Code deployment involves developers writing code along with behavioral logic for different functions.

- Event triggers are user activities like database changes, file uploads, and HTTP requests, which set off different functions.

- Function invocation happens when a cloud provider’s event-driven architecture invokes a function in response to an event.

- Isolated execution is interference-free. It happens in an environment offered by the cloud provider.

- Automatic scaling increases or decreases the number of running function instances based on the incoming workload.

- Resource allocation helps execute functions with resources such as memory and central processing units (CPUs).

- Execution and response mark the end of data processing, response generation, and interaction with services. Serverless databases or caches store additional contextual information during the execution.

- Output is the final response to the request. It gets shared with whoever invoked the function at the beginning.

- Resource deallocation happens as the cloud provider reclaims the resources the function used.
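The stages above can be traced in a toy, single-process runtime. This is only a sketch for illustration: the `deploy` decorator, the `FUNCTIONS` registry, and the 128 MB figure are assumptions made for this example, and automatic scaling is left out because it needs multiple instances.

```python
from typing import Any, Callable, Dict, List

# Registry that maps an event type to the function deployed for it.
FUNCTIONS: Dict[str, Callable[[dict], Any]] = {}


def deploy(event_type: str):
    """Code deployment: register a function for one event type."""
    def register(func):
        FUNCTIONS[event_type] = func
        return func
    return register


@deploy("file_upload")
def describe_upload(event: dict) -> str:
    return f"stored {event['name']} ({event['size']} bytes)"


def invoke(event: dict, log: List[str]) -> Any:
    """Walk one event through the stages, recording each one in `log`."""
    log.append("event trigger")
    func = FUNCTIONS[event["type"]]       # look up the deployed function
    log.append("function invocation")
    workspace = {"memory_mb": 128}        # hypothetical resource allocation
    log.append("resource allocation")
    try:
        result = func(dict(event))        # run on a copy so the caller's event is untouched
        log.append("execution and response")
        return result                     # output goes back to the caller
    finally:
        workspace.clear()                 # resource deallocation
        log.append("resource deallocation")
```

Calling `invoke({"type": "file_upload", "name": "a.png", "size": 10}, log)` with an empty list for `log` returns the function's response and leaves the stage names in the list in the order they occurred.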

Fundamental serverless architecture components

Cloud providers use different terminologies to explain how their architecture works, but these serverless terms are common in most scenarios.

- Invocation: the act of executing a single function

- Duration: the period a serverless function needs for execution

- Cold start: the latency period that occurs after an inactive period or right after a function triggers for the first time

- Concurrency limit: the number of function instances an environment is able to simultaneously run in one of its regions

- Timeout: the time limit for how long a function can run before a cloud provider terminates it
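To make these terms concrete, here is a small simulation. It is a toy model under stated assumptions, not how any provider measures things: `ToyPlatform`, its default numbers, and the `in_flight` parameter are all invented for this sketch.

```python
from dataclasses import dataclass


@dataclass
class ToyPlatform:
    cold_start_ms: int = 400     # hypothetical initialization penalty
    timeout_ms: int = 3000       # hypothetical per-invocation time limit
    concurrency_limit: int = 2   # hypothetical cap on simultaneous instances
    warm: bool = False           # becomes True after the first invocation
    invocations: int = 0         # count of accepted invocations

    def invoke(self, work_ms: int, in_flight: int = 0) -> dict:
        """Simulate one invocation that needs `work_ms` of execution time.

        `in_flight` is how many instances are already running.
        """
        if in_flight >= self.concurrency_limit:
            return {"status": "throttled"}
        self.invocations += 1
        # Only the first call (or a call after idling, not modeled here) pays the cold start.
        startup = 0 if self.warm else self.cold_start_ms
        self.warm = True
        if work_ms > self.timeout_ms:
            # The platform stops the function once the timeout is reached.
            return {"status": "timeout", "duration_ms": self.timeout_ms, "cold_start_ms": startup}
        return {"status": "ok", "duration_ms": work_ms, "cold_start_ms": startup}
```

In this model the first call reports the cold start penalty, later calls report zero, a request longer than the timeout is cut off, and a request arriving while the concurrency limit is already reached is throttled.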


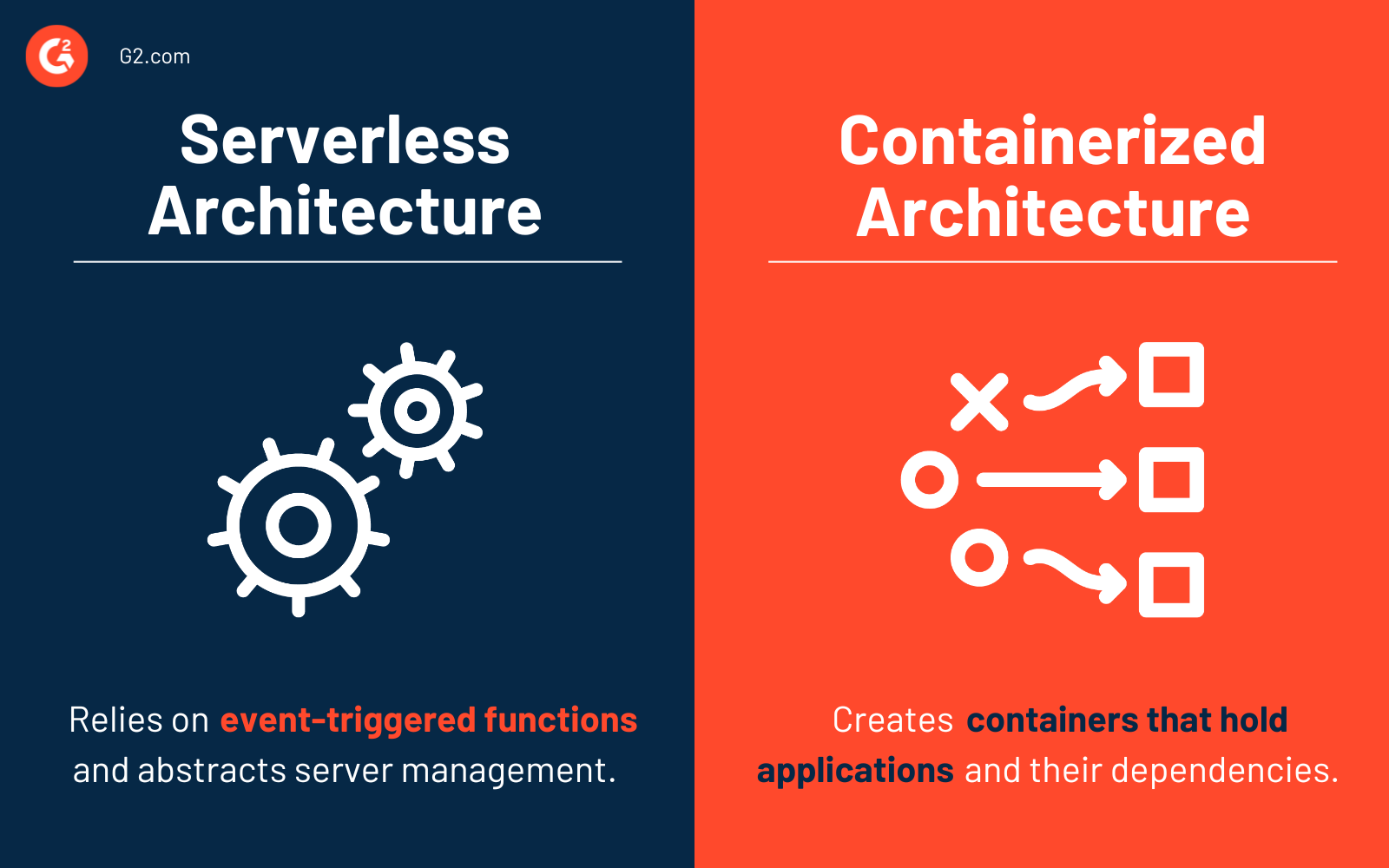

Serverless architecture vs. containerized architecture

The key difference here is that serverless architecture depends on event-triggered functions and abstracts server management, whereas containerized architecture creates containers that hold applications and their dependencies. Containerization generally requires developers to manage container orchestration tools themselves.

Containerized architecture employs container-based virtualization to combine applications and their dependencies into isolated containers. As a result, developers can be sure of consistent execution, regardless of environments. Companies use container platforms like Docker and orchestration systems like Kubernetes to manage, scale, and simplify application deployment.

| | Serverless architecture | Containerized architecture |
| --- | --- | --- |
| Abstracts | Server management functions | App dependencies only |
| Scales | Functions | Container clusters, but with manual effort |
| Functions as | Event-triggered units | Isolated containers |
| Fee | Pay-as-you-go pricing model | Resource and runtime costs |
| Efficiency | Significantly improved | Moderately improved with overhead per container |
| Use cases | Microservices, event-driven apps, and APIs | Complex applications with varied dependencies |
| Best for | Unpredictable and scaling workloads | Managing resource requirements consistently |

Serverless vs. microservices: Key differences

Microservices divide applications into independent services communicating via APIs, meaning you can easily control service components. However, you also have to manage the infrastructure.

On the other hand, serverless architecture focuses on event-triggered functions and abstracts server management. While both are popular app development approaches, microservices are best suited for complex applications, whereas serverless suits apps with scaling workloads.

Benefits of serverless architecture

Serverless architecture offers enterprises multiple benefits, including scalability, cost-efficiency, faster time-to-market, and flexibility for designing event-driven applications.

- Cost-efficiency: Traditionally, companies using servers always provisioned resources based on expected workload. The result was either underutilization or overprovisioning. Serverless computing lets companies enjoy dynamic resource allocation with automatic scaling. Consequently, companies save money because they don’t pay for idle resources.

- Improved productivity and faster time-to-market: The best part of serverless is that a cloud platform handles everything related to the underlying infrastructure. Companies can focus on refining code and deploying it faster. Plus, the lack of infrastructure management responsibilities helps DevOps teams debug faster, collaborate better, and respond to market demands swiftly.

- Reduced routine maintenance tasks: Serverless architecture promotes zero server management, meaning companies don’t have to spend time tackling hardware failures or handling other server-related jobs. The cloud provider is responsible for routine maintenance tasks, like security configurations, software updates, and patch management.

- High availability and scalability: Enterprises love serverless because it can support fault-tolerant and highly available services. With a cloud provider’s failover and disaster recovery systems, your system will stay functional even in case of hardware or other failures. Serverless computing platforms also feature auto-scaling capabilities, enabling you to deliver optimal performance during traffic spikes or changing workloads.

- Better user experience: Serverless platforms can improve user satisfaction by reducing latency and delivering responses almost immediately. This responsiveness is extremely important for user-facing apps like games, live-streaming apps, and apps for internet of things (IoT) devices. It comes from serverless architecture’s ability to integrate with different sources and process data from these systems.
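The cost-efficiency point above comes down to simple arithmetic. The sketch below assumes a pricing model billed per request and per GB-second of compute, which is a common shape for FaaS pricing; the function names and every price passed to them are made up for illustration and are not any provider's actual rates.

```python
def provisioned_monthly_cost(servers: int, price_per_server: float) -> float:
    """Fixed cost: every server is paid for whether or not it is busy."""
    return servers * price_per_server


def pay_per_use_monthly_cost(
    invocations: int,
    avg_duration_s: float,
    memory_gb: float,
    price_per_gb_second: float,
    price_per_million_requests: float,
) -> float:
    """Usage-based cost: compute time actually consumed plus a per-request fee."""
    compute = invocations * avg_duration_s * memory_gb * price_per_gb_second
    requests = invocations / 1_000_000 * price_per_million_requests
    return compute + requests
```

With hypothetical figures of two servers at 70 per month, `provisioned_monthly_cost(2, 70.0)` gives 140. For 3 million invocations averaging 0.2 seconds at 0.5 GB, priced at 0.00002 per GB-second and 0.20 per million requests, `pay_per_use_monthly_cost` comes to roughly 6.60. The gap narrows as traffic becomes heavy and sustained, so the comparison is worth rerunning with your own numbers.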

Challenges of serverless architecture

Despite its scaling capabilities, serverless architecture poses numerous challenges, such as vendor lock-in, cold start latency, and a lack of control over infrastructure. Organizations usually address these challenges by reducing their dependency on single vendors and evaluating application requirements at the onset.

- Cold start latency: Serverless architecture allocates resources dynamically after function invocation. At times, a function experiences a cold start, especially when it triggers for the first time or after being inactive for a while. The backend work of resource provisioning, dependency loading, and runtime environment initialization creates latency. As a result, users may experience unexpected delays on the front end. Consider speaking to your vendor about warm-up techniques and optimizing a function’s initialization to reduce latency.

- Limited execution time: Functions have execution time limits on a serverless platform. For example, you can configure each AWS Lambda function to run for up to 15 minutes. Similarly, Azure Functions on the Consumption plan time out after 5 minutes by default. This restriction poses challenges to development teams working on complex, time-consuming data processing tasks or computations. In such cases, consider redesigning applications so they can efficiently complete tasks under the time limit.

- Vendor lock-in: Enterprises sometimes rely on a single cloud provider’s services and APIs for convenience. However, every provider follows proprietary integrations, deployment models, and services. As a result, organizations may experience difficulties while switching to an on-premises environment or migrating to another cloud service in the future. Using standardized APIs for app development and opting for a multicloud strategy can help companies address these challenges.

- Lack of control and resources: Since serverless services remove the underlying infrastructure, it may not be possible for organizations to customize or control the environment. For instance, you may not be able to adjust networking setups or runtime configurations to meet specific requirements. Further, the stateless design of serverless doesn’t suit applications that need continuous connections or shared states between execution. You can tackle this resource constraint with storage solution integration, but you’ll end up making the system more complex.

- Security challenges: Serverless follows a shared responsibility model in which developers secure the application code and cloud vendors protect the infrastructure from threats. Besides limited control over the environment, organizations struggle with the risks of sharing the infrastructure with other companies. That’s why it’s important to adopt security measures, like vulnerability assessment, encryption, and access control methods.
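One way to redesign a long-running job so it fits under an execution time limit is to process it in resumable chunks. The sketch below is an assumption-laden illustration: `process_in_chunks` and its parameters are hypothetical, and in a real deployment the returned index would be saved in external storage and a follow-up invocation would pick up from it.

```python
import time


def process_in_chunks(records, start_index, time_budget_s, handle, clock=time.monotonic):
    """Process records from `start_index` until done or the time budget is spent.

    Returns the index to resume from; a value equal to len(records)
    means every record has been handled.
    """
    deadline = clock() + time_budget_s
    index = start_index
    while index < len(records) and clock() < deadline:
        handle(records[index])
        index += 1
    return index
```

Because the function keeps no state of its own between calls, it also fits the stateless design described above: the only thing carried from one invocation to the next is the resume index. The `clock` parameter exists so the time source can be swapped out in tests.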

Apart from all these challenges, serverless may pose a learning curve for developers who have previously worked with traditional architecture. As a result, organizations may experience slight delays with initial projects and timelines.

Serverless architecture use cases

Serverless architecture is ideal for IoT data processing, task automation, data pipeline creation, web app development, and data streaming.

- Web applications: Developers use serverless platforms like Google Cloud and Microsoft Azure Cloud Services to build web apps that serve dynamic content based on user inputs. Serverless is also the go-to choice because of its ability to scale resources automatically and prevent downtime even during traffic spikes.

- Batch processing: Enterprises use serverless to create data pipelines, perform extract, transform, and load (ETL) tasks, and execute data transformation and file processing. For example, they can design functions that trigger on-demand data processing while minimizing resource usage and operational costs.

- IoT data processing: Serverless architecture is also suitable for processing IoT sensor data, social media feeds, and user activity logs. Companies can design event-driven triggers to ingest, process, and analyze data, as well as initiate subsequent tasks. This process accelerates data insights delivery, helping companies to make decisions faster.

- Continuous integration (CI) and continuous delivery (CD) pipelines: Companies often use serverless functions to automate different parts of their CI/CD pipelines. For example, serverless can automatically trigger functions and start the build process in response to code commits. It can also automate staging environment deployment and resource provisioning. As a result, enterprises don’t just enhance development workflows; they also improve software delivery processes.

- Task automation: Companies use serverless to automate scheduled tasks without relying on dedicated server resources. For example, they develop triggers that initiate data synchronization, backups, and database management tasks at regular intervals.
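The batch and IoT use cases above often take the form of a small extract, transform, and load function triggered by incoming data. Here is a minimal sketch using only the Python standard library; the event shape (a `body` field holding CSV text), the column names, and the `sink` list standing in for a data store are assumptions made for this example.

```python
import csv
import io


def etl_handler(event, sink):
    """Extract CSV rows from the event, transform them, and load them into `sink`."""
    reader = csv.DictReader(io.StringIO(event["body"]))
    loaded = 0
    for row in reader:
        try:
            # Transform: normalize the sensor name and parse the reading as a number.
            record = {
                "sensor": row["sensor"].strip().lower(),
                "celsius": float(row["celsius"]),
            }
        except (KeyError, ValueError, TypeError, AttributeError):
            # Skip rows with missing columns or unparseable readings.
            continue
        sink.append(record)
        loaded += 1
    return {"loaded": loaded}
```

The same function could be wired to a file upload event for batch processing or to a timer for scheduled automation; only the trigger changes, not the logic.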

Serverless deployment applications

Other popular ideas for serverless apps include:

- Chatbots

- Mobile apps

- RESTful APIs

- Virtual assistants

- Image and video processing

- Serverless data warehousing

- Asynchronous task processing

Tools for serverless architecture

Deploying a serverless framework requires enterprises to use a combination of software solutions and services. Below are the prominent ones they commonly use.

- Cloud computing platforms cater to your infrastructure requirements with computing resources, storage, and network solutions.

- API platforms help organizations manage the API gateway lifecycle, authentication, endpoints, and rate limiting.

- Version control software solutions let developers track software project changes and collaborate on code changes.

- CI/CD tools automate serverless application development, testing, and deployment.

- Log monitoring systems scan and track application, server, and network log files for security and performance issues.

- Encryption solutions help companies protect data at rest, in use, and in transit.

- Server virtualization platforms are used to create independent virtual servers from physical servers.

- Integrated development environments (IDEs) allow programmers and developers to write, test, and debug serverless code from a single place.

Rewrite the code with serverless

When innovation is the currency of progress, traditional methods are no longer an option. If you’ve been managing infrastructure manually, you know the downsides of doing it all in-house. Serverless keeps you free from worrying about resource allocation, provisioning, or maintenance costs.

Instead, it gives you the freedom to focus on your company’s evolution. Whether you’re just starting to build that next app with Java or create a digital marvel using Python, a serverless environment is the best way to get there.

Ready to innovate with serverless? Check out the top infrastructure as a service (IaaS) providers offering the best cloud-hosted, enterprise infrastructure.

Sudipto Paul

Sudipto Paul is a former SEO Content Manager at G2 in India. These days, he helps B2B SaaS companies grow their organic visibility and referral traffic from LLMs with data-driven SEO content strategies. He also runs Content Strategy Insider, a newsletter where he regularly breaks down his insights on content and search. Want to connect? Say hi to him on LinkedIn.