Depending on who you ask, AI is our salvation, the apocalypse, a novelty, an industry-changer, or a fad. Between virtual writing assistants, AI in supply chain software, and meeting-scheduling bots, there’s no industry unbitten by the machine learning bug. But HR has always been about the people. When the “people industry” gets automated, people get nervous.

In this article, we’ll cover how AI technology pervades HR, where it’s already present in the department, the ethical concerns it raises, and how we can address them. Jump to the section you need, or read on for the whole ride as we ask one crucial question: How is generative AI changing the face of HR, and what ethical questions should we prepare for as this technology advances?

Where is AI present in HR?

AI is already present in HR, influencing practically every category, from recruitment marketing to employee engagement.

Chatbots, virtual assistants, and automation at every level could change the jobs of recruiters, administrators, and L&D professionals alike. Writing and analyzing text is a huge part of HR, and handing that work to machines frees up time and turns a process once driven largely by gut instinct into something closer to a mathematical formula. As you can see, AI in HR has a far-reaching impact.

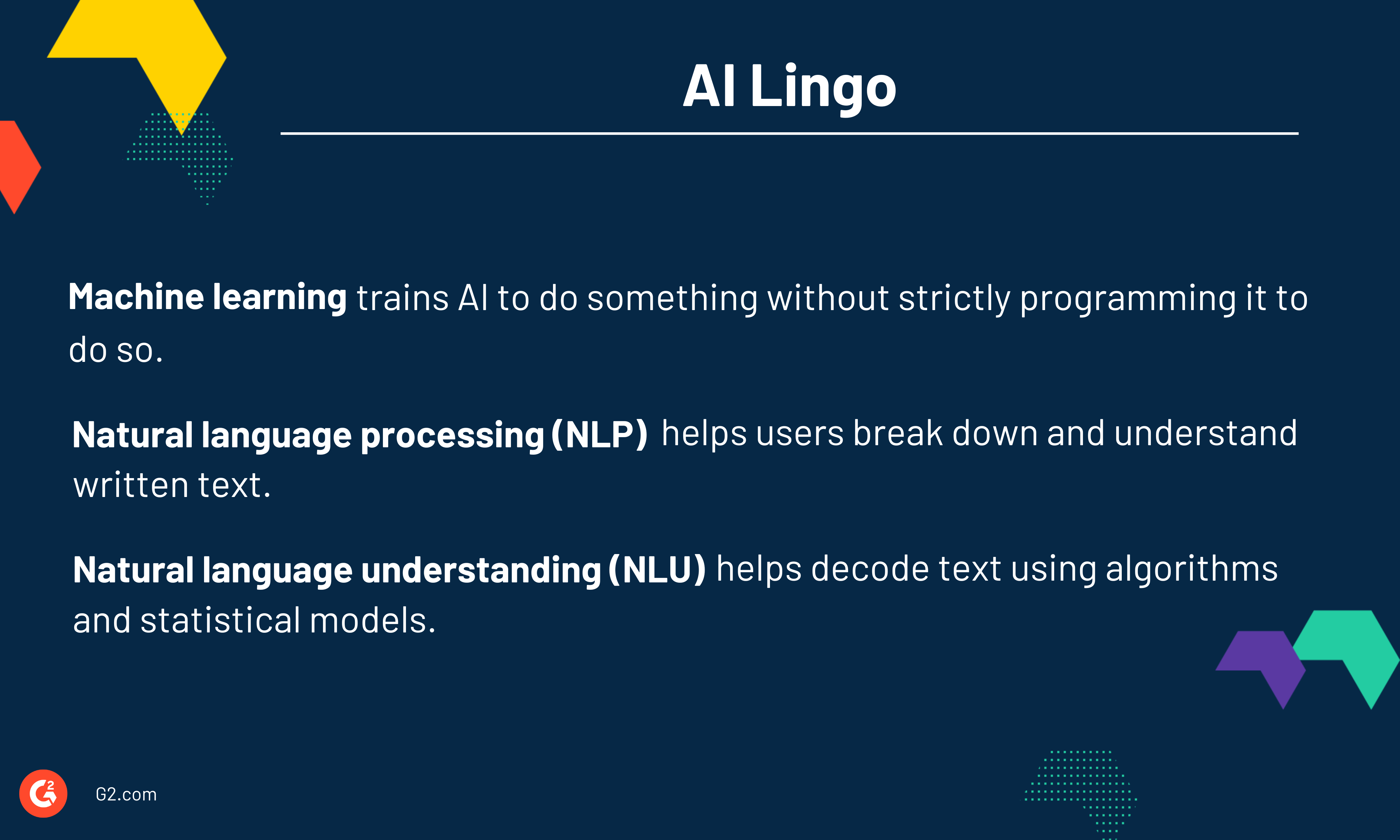

Before expanding further, let’s understand some common terms associated with AI.

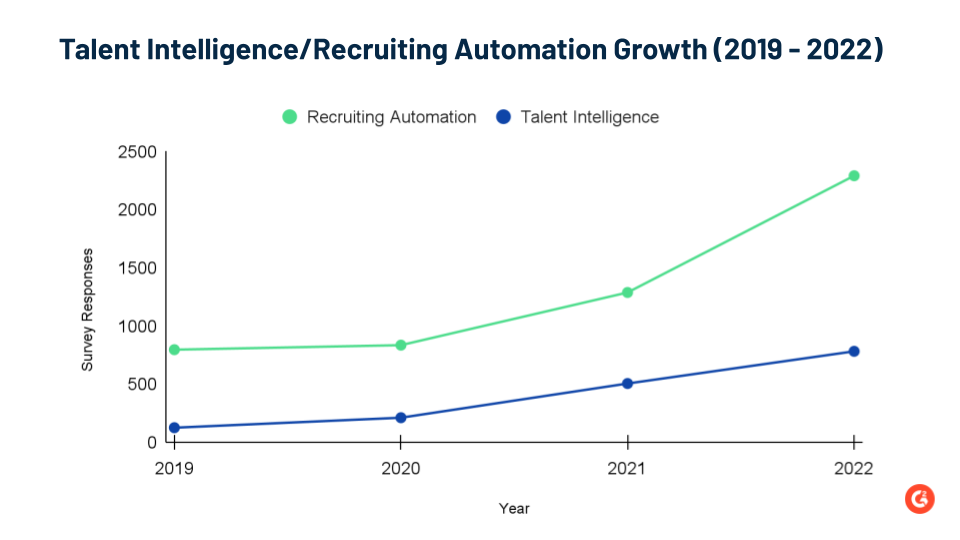

G2 doesn’t currently have a category dedicated to AI in HR. However, AI shows up heavily in two categories. The first is Talent Intelligence Software, which captures how AI is transforming talent management and acquisition. The second is Recruiting Automation Software, which has long covered tools that automate candidate sourcing.

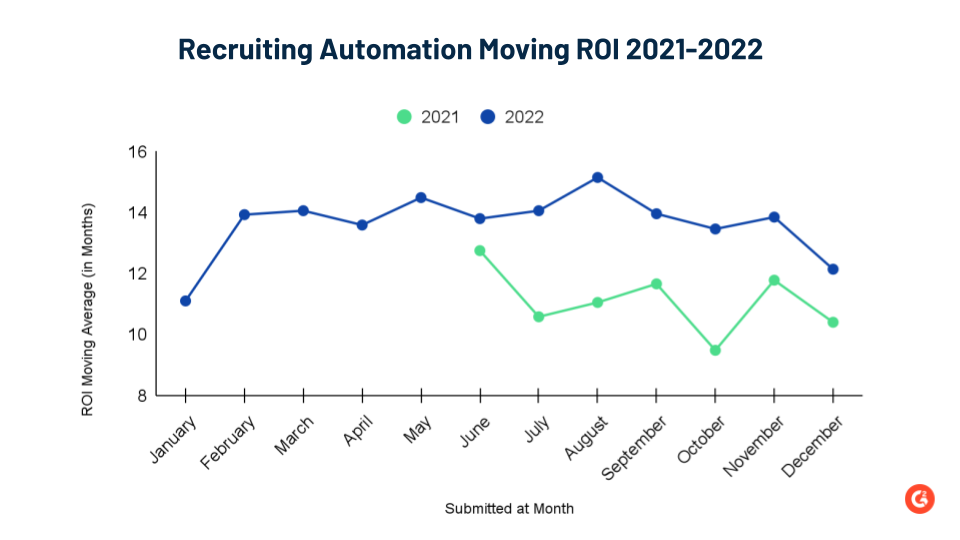

Both categories have seen dramatic growth in review numbers since G2 created them in 2012: about 188% for Recruiting Automation and around 526% for Talent Intelligence. Growth was especially strong between 2021 and 2022.

AI is especially prominent in the Talent Intelligence category, which is defined by solutions that use AI to better source, match, or understand workers and their skills. The category’s marked growth shows how important this has become: Talent Intelligence has added so many products in the last 12 months that it ranks in the top 24% of G2’s trending categories.

As we look further into how machine learning revolutionizes HR, let’s turn to something the internet (and AI) struggle with—nuance.

Text analysis and generative AI in HR

Here, we’ll focus on HR’s connection to generative AI, i.e., AI that can create, write, analyze, and refine text. Generative AI produces new content, such as text and images, after being trained on large datasets.

OpenAI’s ChatGPT has been dominating the news lately, but multiple synthetic media software solutions offer similar functionality, including WriteSonic, Jasper, and Anyword.

In each case, the AI generates new text in response to a specific prompt.

And yes, it’s everywhere in the news. You may remember that CNET recently landed in hot water for publishing articles on its site without disclosing that an AI wrote them. We’ll cover the ethical concerns of AI later.

Where does this type of AI show up in HR? The short answer is, well, everywhere. Some uses are still theoretical or one-off, while some vendors have already integrated it into their tools.

How does text analysis connect with HR processes?

HR teams often use natural language processing (NLP) and natural language understanding (NLU) for automatic summarization: condensing a large body of text so its key points can be understood quickly.

This, in turn, allows them to achieve two important goals:

- Sentiment analysis: Examines the tone of a text, for example whether it reads as positive, negative, or neutral

- Overall feeling: Pulls out how the writer feels about the subject

Net promoter score (NPS) surveys, for example, apply this technology to open-ended responses to pinpoint where workers feel stressed or overwhelmed and identify pressure points at work.
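
To make the idea concrete, here is a minimal sketch of lexicon-based sentiment scoring on open-ended survey comments. It assumes a tiny hand-written word list: the `POSITIVE` and `NEGATIVE` sets and every function name below are hypothetical, for illustration only, and commercial engagement tools typically rely on trained language models rather than word lists. Each comment gets a score (positive words minus negative words), and negative terms are tallied across all comments to surface recurring pressure points.

```python
import re
from collections import Counter

# Hypothetical mini-lexicons for illustration; real tools use trained models.
POSITIVE = {"supportive", "flexible", "great", "appreciated", "growth"}
NEGATIVE = {"stressed", "overwhelmed", "burnout", "understaffed", "unclear"}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def score_comment(text: str) -> int:
    """Score one comment as (# positive words) - (# negative words)."""
    words = tokenize(text)
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def pressure_points(comments: list[str]) -> Counter:
    """Count how often each negative term appears across all comments."""
    counts = Counter()
    for text in comments:
        counts.update(w for w in tokenize(text) if w in NEGATIVE)
    return counts


comments = [
    "My manager is supportive, but I feel stressed and overwhelmed.",
    "The team is understaffed, priorities are unclear, and I'm stressed.",
    "Great flexible schedule, and I feel appreciated.",
]
for c in comments:
    print(score_comment(c), c)
print(pressure_points(comments).most_common(2))
```

A word list misses sarcasm, negation (“not stressed”), and context, which is exactly the nuance problem mentioned earlier and one reason production tools use trained models instead.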

Beyond surveys, many tools incorporate generative AI and text analysis into their software in various ways. Here are a few examples, both potential and real-life.

Recruiting software and talent acquisition

Automation forever changed job description management software, allowing recruiters to select templates to create job descriptions quickly. Now, generative AI can create job descriptions from scratch with a few quick prompts. Talent acquisition teams are also pre-generating more and more offer letters, saving time and money. LinkedIn, for example, recently launched a writing assistant for job descriptions and profiles.

Some of the most advanced uses of generative AI come up in recruiting automation software and talent intelligence software. These tools can draft emails, messages, and other candidate touchpoints, automate them, and, over time, learn a template of the ideal candidate. From there, they measure every applicant against this dream candidate. SeekOut, for example, uses AI to aid in recruiting.
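
The “dream candidate” idea can be sketched in a few lines: average the skill profiles of past successful hires into a template, then rank new applicants by cosine similarity to it. This is a hypothetical illustration, not how SeekOut or any specific product works; the skill list, the sample data, and all function names are assumptions, and the sketch assumes at least one past hire.

```python
import math

# Hypothetical skill vocabulary; real systems extract far richer features.
SKILLS = ["python", "sql", "sourcing", "negotiation", "hris"]


def to_vector(skills: set[str]) -> list[float]:
    """One-hot encode a person's skills against SKILLS."""
    return [1.0 if s in skills else 0.0 for s in SKILLS]


def ideal_profile(past_hires: list[set[str]]) -> list[float]:
    """Average the vectors of past successful hires into a template."""
    if not past_hires:
        raise ValueError("need at least one past hire to build a template")
    vectors = [to_vector(h) for h in past_hires]
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank(candidates: dict[str, set[str]], template: list[float]) -> list[tuple[str, float]]:
    """Score every candidate against the template, best match first."""
    scored = [(name, cosine(to_vector(s), template)) for name, s in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


past = [{"sourcing", "negotiation"}, {"sourcing", "hris"}, {"sourcing", "negotiation", "sql"}]
template = ideal_profile(past)
candidates = {"A": {"sourcing", "negotiation"}, "B": {"python", "sql"}, "C": {"hris"}}
for name, score in rank(candidates, template):
    print(f"{name}: {score:.2f}")
```

Note the design consequence: the template is built entirely from past hires, so if those hires skew toward one group, every new applicant is scored against that skew. That is the mechanism behind the bias concerns discussed later in this article.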

Given that G2 users reported waiting an average of 15.61 months in 2022 before seeing a return on their investment in recruiting automation, AI that makes better matches sooner could have a real impact on the bottom line.

Performance management and employee engagement

Performance management is where the analysis side of machine learning comes in. Users can analyze previously written communication between managers and workers to identify themes and recurring patterns. When a manager has to write dozens of pieces of feedback, they can lean on generative AI to get them started or give them a rough draft. The same goes for peer reviewers in 360 feedback software. The solution 360Learning has already implemented generative AI to aid in providing feedback.

Lastly, measuring NPS and using sentiment analysis has long been a staple of employee engagement. It pulls out the relevant, recurring strengths and weaknesses from thousands of statements so leadership teams can see where an organization thrives or fails.

DEI and people analytics

One of the most important uses of text analysis is in diversity, equity, and inclusion (DEI). Bias is often unconscious. Using NLP to analyze past communications can highlight unnecessarily gendered or biased language, reveal differences in how people of different ages, races, or other protected identities are communicated with, and illuminate blind spots where communication stalls. Sentiment analysis can also show where language gets heated or emotions run high in an organization, so teams can troubleshoot social problems.
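
As a rough illustration of how a tool might flag gendered wording in a job posting, the sketch below checks text against two short word lists. The lists and the function name are hypothetical and deliberately abbreviated; real bias-detection tools use larger, research-based vocabularies and often language models.

```python
import re

# Hypothetical, abbreviated word lists for illustration only.
MASCULINE_CODED = {"aggressive", "dominant", "competitive", "rockstar", "ninja"}
FEMININE_CODED = {"nurturing", "supportive", "collaborative", "compassionate"}


def flag_gendered_language(text: str) -> dict[str, list[str]]:
    """Return the coded words found in text, grouped by word list, in order of appearance."""
    words = re.findall(r"[a-z]+", text.lower())
    return {
        "masculine": [w for w in words if w in MASCULINE_CODED],
        "feminine": [w for w in words if w in FEMININE_CODED],
    }


posting = "We want an aggressive, competitive sales ninja to join our collaborative team."
print(flag_gendered_language(posting))
```

A flag is a prompt for review, not a verdict: whether a word like “competitive” is unnecessary in context still takes human judgment.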

Potential ethical issues from AI in HR

Now that we’ve looked at the data and use cases, it’s time to dive into the big question of this article. AI and ethics have been huge topics. What are the concerns specific to HR?

Is your computer being truthful?

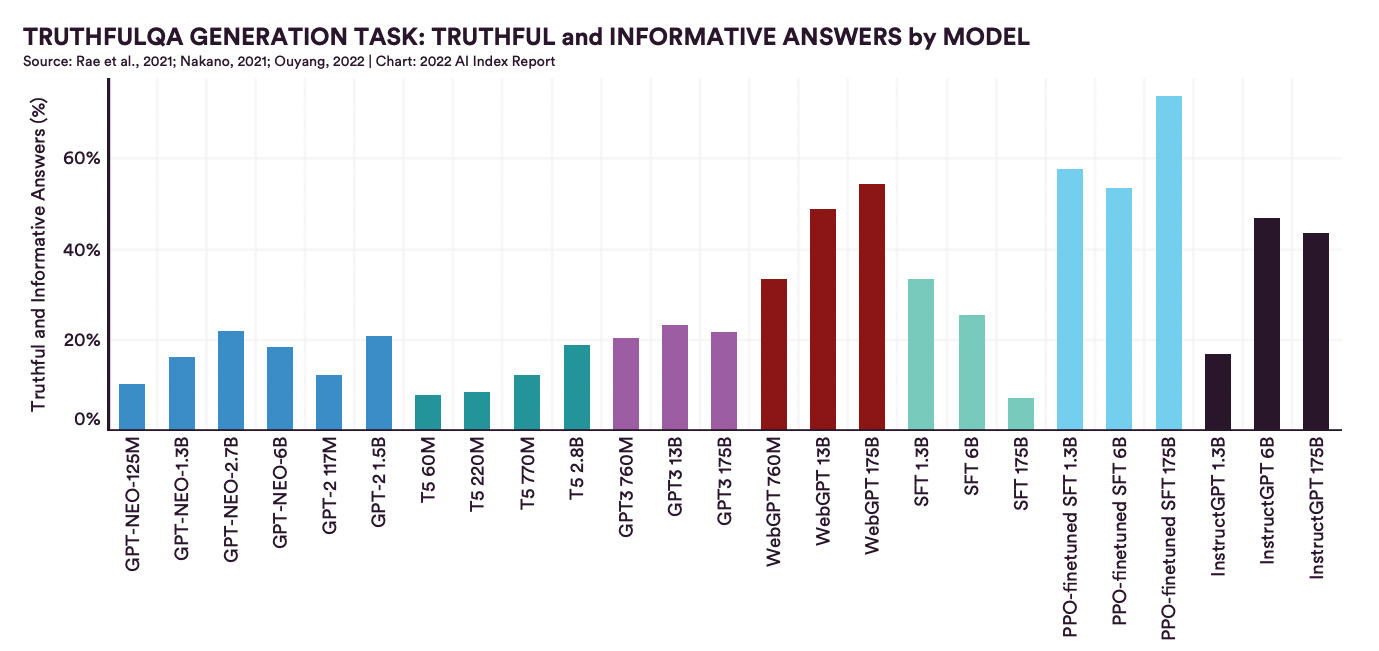

According to the Stanford University Artificial Intelligence Index Report 2022, generative AI’s truthfulness varies wildly depending on the model, but most are truthful less than 40% of the time.

Source: Artificial Intelligence Index Report

All but one of the models reviewed were truthful less than 60% of the time. One reason is that generative AI models don’t always have the most up-to-date information, as an exchange between a beta user and the Bing chatbot showed. The user asks for Avatar 2 showtimes, but Bing says the film hasn’t come out yet. When the user tries to correct it, the AI gaslights them and asks them to apologize.

All of this is pretty funny until you imagine the same argument over something more important, like whether an employee has used all their sick days or who’s covered under a benefits plan. Remember, an AI is only as good as the dataset it’s trained on, and sometimes that dataset doesn’t know what year it is.

Workers and AI-created content

Since generative AI hit the scene, recruiters have dealt with a wave of computer-created resumes and cover letters. The industry seems split on this: some say it could cost a candidate the job, while others don’t mind. Mass-produced emails could be an easy way to cut the excess from your day, but missing details, like the recipient’s name, can backfire. Above all, there’s the old question of plagiarism in more personal work, like thought leadership, where relying on AI could land you in a world of hot water.

People expect HR to lead. Turning to AI every time you need to write a difficult email, a heartfelt speech, or a talk for a conference or broader audience weakens your ability to think on your feet. If word gets out that an HR leader is letting AI do their thinking for them, it ultimately compromises trust and authenticity.

Content ownership

Ownership of AI-generated content is another question every company using AI needs to consider.

This question is more prevalent in the AI art world, which is rife with copycats, but it extends to all workplaces. If you create an asset with AI, do you own it? Or is it owned by the creator of that algorithm? Deciding whether something an employee wrote or created during business hours falls under the purview of the organization can already be a legal issue. Add AI into the mix, and you’ve got a new world of trouble.

Biases

How was your AI trained? Do you know? If it was trained by a human being (which it was, at least for the next few months), it was trained by someone with biases. There have already been cases of AI replicating human mistakes, such as prioritizing male candidates. One AI trained on white and Black names showed biases toward other names. The study “Does AI Debias Recruitment? Race, Gender, and AI’s ‘Eradication of Difference’” by University of Cambridge researchers shows that AI doesn’t necessarily reduce bias.

When an AI learns from a dataset, it can pick up on biases the researchers may not notice and unknowingly replicate them.

Diversity recruiting already works to combat unconscious bias throughout the hiring process. Unfortunately, outsourcing these duties to a digital assistant doesn’t eliminate human flaws from the process. It’s important to know exactly how the AI operates.

Replacing humans

The truth is that AI replaces HR jobs. Or, at the very least, it replaces HR tasks. From writing individual job descriptions to reading through resumes, the industry is becoming more efficient and requires fewer humans.

Ignoring the AI revolution isn’t an option, but forgetting about the humans at the center costs even more.

Avoiding ethical pitfalls in the ChatGPT era

Now that we have a clear view of potential problems, how do we tackle them?

Retain and retrain

If you ask anyone what they love about their job, the tech stack won’t top the list. People like working with people. Their coworkers and managers make or break the environment and often determine whether they stay or leave.

Most of all, they want to feel valued. Is their company investing in them? Seeing a coworker replaced by AI is the quickest way to make someone start looking for the door. Don’t think about AI in terms of job replacement but in terms of task replacement.

Here’s the truth. We’re in the middle of a labor shortage that could persist for years, according to SHRM. As baby boomers retire in droves and the skills gap widens, the industry is short-staffed as it is.

One staffing model gaining popularity is hire-train-deploy. Agencies using this model often work for big companies like Apple, Amazon, and other tech firms that require high-level skills. Their clients may also be companies looking for specific industry experience that know those candidates won’t have the technical skills to back it up.

Client companies pay to have these workers trained and deployed on a per-project basis, sometimes for a couple of years. Once that period ends, the organization can hire them full-time.

In addition, the growing popularity of reskilling options in skills management software shows multiple alternatives to close an organizational skill gap while keeping costs down.

Make a plan

Does your organization have guidelines for using AI-generated content? What about making calls on tasks replaced by AI? Are you okay with workers using AI to write and read for them? What kinds of text analysis automation are you currently using, and do you know how it was trained?

Certain algorithms can help detect whether a piece of writing was produced with AI. As for existing AI tools, some are more transparent than others about their training sources. Do you know the liabilities your organization is opening itself up to with specific partners? If this conversation isn’t already happening in the office, it’s time to make a change.

Take accountability

Sometimes, companies are faced with difficult choices. Not every change is weathered with a full staff. If that’s the case, be honest. AI may be contributing more and more to business, but it’s still humans at the top making decisions to hire and fire the workforce.

If there’s a situation where you have to replace your staff, be clear that it was a decision the company made. The C-suite is still flesh and blood for now, and the only way forward is by communicating clearly.

Focus on transparency

As CNET learned when it quietly replaced writers without telling anyone, people get mad when you don’t tell them an AI wrote something, or when they interact with an AI believing it’s a human.

Recently, my colleague discussed the ethics of employee monitoring software in his piece, “Who Watches the Watchmen? Legislators Set to Limit Employee Monitoring Software.” In it, he discussed the importance of transparency. Do your workers and customers know when they’re interacting with an AI and when it’s a human? If not, that’s a real problem.

The future is now

Given the complicated nature of AI, how well equipped are we to tackle these ethical issues? The immediate future presents problems. In March 2023, Microsoft laid off its ethics and society team, which had already shrunk from around 30 people at its height in 2020 to seven. Similar cuts are happening at Google and Twitch.

We are also seeing the beginning of regulation of the technology. A California law requires chatbots to disclose that they aren’t human. The FTC uses three different rules to prevent the sale of racially biased algorithms. Meanwhile, the EU has passed the AI Act, which sorts AI systems into one of four risk categories, and China has rolled out a series of regulations of its own. Beyond California, there are other U.S. laws, like New York City’s Local Law 144, which prevents employers from using AI to screen candidates for hiring or promotion unless the technology has first undergone a bias audit.

AIs don't replace people; people do

This also makes it all the more important to focus on accountability in this ChatGPT era.

As legislation struggles to catch up, companies must take the ethical use of AI into their own hands. This starts with accountability and transparency in the most people-driven department of all: HR. Companies have two choices: rise to the challenge or get swept under the wave.

Grace Savides

Grace Savides is a Senior Research Analyst who loves discussing all things HR. She enjoys exploring where the theoretical, policy, and data-driven sides of the industry interact with the unpredictable and ever-important human elements. Before G2, she worked in content marketing, social media, health care, and editing. She dedicates her leisure time to video games, painting, DND, and spending time with her wonderful boyfriend and two dogs.